Artificial Neural Networks

Although artificial neural networks have been around since the 1940s, until recently they rarely appeared in mainstream news. That changed in 2015, when Google Research began analyzing the information stored in the hidden layers of trained neural networks: the resulting images (frequently called “Deep Dream”) began showing up in videos and on mainstream news and entertainment sites. Described simply, artificial neural networks attempt to emulate biological neural networks by systematically adjusting the edge weights between layers of nodes until the network produces the desired outputs on a set of training inputs. Once trained, neural networks are useful for identifying patterns. When applied to images, they can be trained to recognize certain objects or patterns, often with a surprising degree of success.
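A minimal sketch of the weight-adjustment idea, assuming the smallest possible network: two input nodes joined by weighted edges to a single output node. The weights are adjusted with plain gradient descent (one common training method; the text above does not name a specific one), and the training set is the logical AND function. The names `train`, `predict` and `AND_SAMPLES` are illustrative choices, not part of any library.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, inputs):
    """Output node value: weighted sum over the incoming edges, then sigmoid."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train(samples, epochs=5000, lr=0.5):
    """Repeatedly adjust the edge weights by batch gradient descent."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs  # one weight per edge from an input node
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_inputs
        grad_b = 0.0
        for inputs, target in samples:
            # For a sigmoid output with cross-entropy loss,
            # dLoss/dz simplifies to (output - target).
            error = predict(weights, bias, inputs) - target
            for i, x in enumerate(inputs):
                grad_w[i] += error * x
            grad_b += error
        # Nudge every weight a small step against its gradient.
        weights = [w - lr * g for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b
    return weights, bias


# Hypothetical training set: the logical AND of two binary inputs.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train(AND_SAMPLES)
for inputs, target in AND_SAMPLES:
    print(inputs, target, round(predict(weights, bias, inputs), 3))
```

After training, the output for `(1, 1)` is close to 1 and the other three outputs are close to 0. Deeper networks insert hidden layers between the inputs and the output and use backpropagation to compute the same kind of gradient for every edge.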

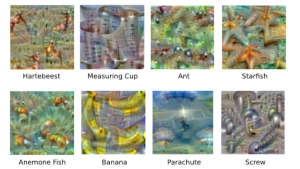

Until recently, little was known qualitatively about the information actually stored within a trained neural network. When Google Research set out to understand these systems more concretely, they fed a trained network ‘noise images’ consisting of random pixels, gradually adjusted each image toward what the network considered a particular object, and qualitatively analyzed the results. Remarkably, starting from nothing but noise, the trained networks produced recognizable images of the objects they had been trained to identify.
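The noise-to-image process can be illustrated with a toy sketch. It assumes a single output node with hand-picked weights (`BAR_WEIGHTS`, hypothetical) standing in for a trained network; this node responds to a bright vertical bar in a 3x3 image. Starting from random pixels, the sketch uses gradient ascent on the *input* to raise the node's output while the weights stay fixed. Google's experiments used a large trained convolutional network and also constrained the image to have statistics similar to natural images, both of which this sketch omits.

```python
import math
import random


def sigmoid(z: float) -> float:
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical "trained" weights for a 3x3 image in row-major order. This node
# favors a bright middle column and dark side columns. A real network would
# learn its weights from data; these are hand-picked for illustration.
BAR_WEIGHTS = [-1.0, 1.0, -1.0,
               -1.0, 1.0, -1.0,
               -1.0, 1.0, -1.0]
BAR_BIAS = 0.0


def score(pixels):
    """How strongly the node believes the image contains a vertical bar."""
    return sigmoid(sum(w * p for w, p in zip(BAR_WEIGHTS, pixels)) + BAR_BIAS)


def dream(steps=500, lr=1.0, seed=0):
    """Start from random noise and repeatedly nudge each pixel to raise score()."""
    rng = random.Random(seed)
    pixels = [rng.random() for _ in BAR_WEIGHTS]  # noise image in [0, 1)
    for _ in range(steps):
        s = score(pixels)
        # For a single sigmoid node, d(score)/d(pixel_i) = s * (1 - s) * w_i.
        grads = [s * (1.0 - s) * w for w in BAR_WEIGHTS]
        # Gradient ascent on the input, clamping pixels to the valid range [0, 1].
        pixels = [min(1.0, max(0.0, p + lr * g)) for p, g in zip(pixels, grads)]
    return pixels


noise = [random.Random(0).random() for _ in BAR_WEIGHTS]  # same starting noise
image = dream()
for row in range(3):
    print(" ".join(f"{p:.2f}" for p in image[row * 3:row * 3 + 3]))
print(f"score: {score(noise):.3f} -> {score(image):.3f}")
```

This reverses the roles used during training: instead of adjusting edge weights to fit fixed images, the fixed weights pull the noise toward the pattern the node has learned to detect.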

The generated images, as seen above, clearly resemble the objects the networks were trained to recognize. This novel insight into the abstract information stored in networks could lead to increased use of graph theory and networks in pattern recognition and other machine learning applications. In this sense, the applications of networks may extend far beyond what many students expect.

More info:

http://googleresearch.blogspot.com/2015/06/inceptionism-going-deeper-into-neural.html