Chris Graham, Harold van Es, and Bob Schindelbeck, Department of Crop and Soil Sciences, Cornell University

Land application of manure creates conditions conducive to significant environmental losses of nutrients. Manure application adds large amounts of nitrogen (N) and phosphorus (P), often resulting in excess residual levels, especially after drier growing seasons. Losses are especially acute in the following winter and spring, as excess water from snowmelt and rain promotes runoff and erosion of P, leaching of nitrate, and emissions of nitrous oxide from denitrification. Nitrous oxide is a significant greenhouse gas concern.

Cover crops are increasingly adopted for various purposes, including to suppress weeds, reduce runoff and erosion, build soil health, provide nitrogen (from legumes), or immobilize leftover nitrates. For manured fields, winter cover crops may have special benefits by limiting P losses through reduced runoff and erosion, and by scavenging residual N and making it unavailable for leaching and denitrification.

In this study, we tested the ability of oats (Avena sativa L.) and winter rye (Secale cereale L.) cover crops to reduce nutrient losses through multiple potential pathways during the early winter and spring season in a soil with a history of manure application. Winter rye and oats were selected for their popularity in the northeastern USA and for their difference in winter tolerance. Oats establish well in the fall but are winter-killed in our climate, which eliminates the need to terminate their growth in the spring. Rye, on the other hand, survives our winters and resumes active growth early in the spring. Both cover crops provide soil cover and take up residual N from the previous growing season, thereby reducing both N and P losses. We hypothesized that rye, because it grows longer into the fall and resumes growth in the spring, is more effective than oats at reducing environmental losses.

Methods

This study was conducted on a working dairy farm located in Central New York using a field with a recent history of manure application. The soil at the research site is an Ovid silt loam with 4% average organic matter content in the surface soil and pH of 7.1. During the previous three years, manure was applied in April 2008, October 2009 and April 2010 (final application before study commenced) at total N rates of 145, 170, and 100 lbs per acre, respectively.
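For readers who work in metric units, the manure N rates above can be converted with a short calculation. The sketch below is illustrative only: the function and variable names are our own, and the conversion uses the standard definitions of the pound and the acre rather than anything specific to this study.

```python
# Standard unit definitions (not study data).
KG_PER_LB = 0.45359237
HA_PER_ACRE = 0.40468564224


def lbs_per_acre_to_kg_per_ha(rate_lbs_per_acre):
    """Convert an application rate from lbs/acre to kg/ha."""
    return rate_lbs_per_acre * KG_PER_LB / HA_PER_ACRE


# Total N rates reported in the text for the three manure applications (lbs N/acre).
manure_n_lbs_per_acre = {
    "April 2008": 145,
    "October 2009": 170,
    "April 2010": 100,
}

# Same rates in kg N/ha, rounded to one decimal place.
manure_n_kg_per_ha = {
    date: round(lbs_per_acre_to_kg_per_ha(rate), 1)
    for date, rate in manure_n_lbs_per_acre.items()
}

# Cumulative N applied over the three applications.
total_lbs_per_acre = sum(manure_n_lbs_per_acre.values())

for date, rate in manure_n_kg_per_ha.items():
    print(f"{date}: {rate} kg N/ha")
print(f"Three-application total: {total_lbs_per_acre} lbs N/acre")
```

One lb per acre is about 1.12 kg per hectare, so the metric figures are only slightly larger than the values reported in lbs per acre.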

Winter rye and oats were broadcast seeded on 24 September 2010 after corn silage harvest in a spatially-balanced complete block design at a rate of 100 lbs per acre. Along with control plots, each cover crop treatment was replicated four times for a total of twelve plots. Quadrats of rye and oats were subsequently harvested on 3 December 2010 and analyzed for N uptake. Roots were harvested to a depth of 6 inches. Soil samples were taken on 3 December, 14 March, 7 April, and 28 April from the 0-to-6 and 6-to-12 inch soil layers for mineral N analysis. On the latter two dates, soil material was also collected to measure nitrous oxide emission potential using a method involving simulated rainfall (to induce denitrification) and a 96-hour incubation at seasonal temperatures (50°F for 7 April and 60°F for 28 April). Soil water was sampled at 20-inch depth using a tension lysimeter to determine nitrate content.

Results

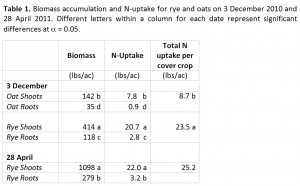

Cover Crop Biomass and N Contents

The rye cover crop produced much more biomass than the oats during the fall season after seeding, as measured on 3 December (Table 1). Aboveground biomass was three times greater in the rye plots than in the oat plots, as rye grew more vigorously and was not affected by frost kill. The larger surface biomass for rye implies that it provides greater benefits for reducing runoff, erosion, and P losses. Rye nitrogen uptake was also higher, at 23.5 vs. 8.7 lbs per acre for oats (about 2.7 times as much). By 28 April, the rye had accumulated more than twice the biomass measured on 3 December, but total N uptake was similar (about 25 lbs per acre; Table 1).
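The uptake comparison can be checked with a short calculation. The sketch below uses only the two 3 December uptake values quoted in the text; the variable names are our own. It separates "times as much" (a ratio) from "percent greater" (a relative difference), which are easy to confuse.

```python
# Fall N uptake on 3 December, as quoted in the text (lbs N per acre).
rye_n_uptake = 23.5
oat_n_uptake = 8.7

# Ratio: how many times the oat uptake the rye uptake represents.
ratio = rye_n_uptake / oat_n_uptake

# Relative difference: how much greater rye uptake is, as a percent of oat uptake.
percent_greater = (rye_n_uptake - oat_n_uptake) / oat_n_uptake * 100

print(f"Rye took up {ratio:.1f} times as much N as oats")
print(f"Rye N uptake was {percent_greater:.0f}% greater than oats")
```

With these inputs the ratio is about 2.7, which corresponds to an uptake roughly 170% greater than that of oats.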

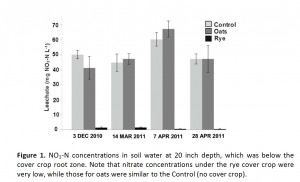

Nitrate Leaching

Cover crop effects on nitrate concentrations below the root zone (20-inch depth) varied considerably (Figure 1). Rye significantly and markedly decreased NO3-N concentrations compared to the Control and Oats treatments. Concentrations under oats were about the same as in the plots without a cover crop, indicating that oats provided no benefit for reducing leaching. Averaged over the spring season, measured nitrate levels were 43, 52, and 1 mg NO3-N per liter for the Control, Oats, and Rye plots, respectively.
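To put the seasonal averages on a common footing, each treatment can be expressed as a percent change relative to the Control. This is a minimal sketch using only the three averages quoted above; the function and variable names are our own, and the calculation says nothing about statistical significance.

```python
# Spring-season average nitrate at 20-inch depth, as quoted in the text (mg NO3-N per liter).
mean_nitrate = {"Control": 43, "Oats": 52, "Rye": 1}


def percent_change_vs_control(treatment_value, control_value):
    """Percent change of a treatment mean relative to the control mean."""
    return (treatment_value - control_value) / control_value * 100


# Percent change for each cover crop treatment; negative values are reductions.
changes = {
    treatment: percent_change_vs_control(value, mean_nitrate["Control"])
    for treatment, value in mean_nitrate.items()
    if treatment != "Control"
}

for treatment, change in changes.items():
    print(f"{treatment}: {change:+.1f}% relative to Control")
```

On these averages, rye corresponds to a reduction of roughly 98% relative to the Control, while the oat average is about 21% higher than the Control.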

Nitrous Oxide Emissions

While variability was high, both spatially and temporally, significant treatment effects on nitrous oxide emissions were found, and these effects changed as the spring season progressed (Figure 2). The Oats treatment produced results similar to the Control throughout the sampling period, while Rye decreased N2O emissions in late April after a high initial flux earlier in the month. Higher emissions were measured at the early sampling from plots with cover crops, which had a relatively fresh carbon source that promotes denitrification. Reductions in the Rye plots later in April were presumably the result of a smaller soil nitrate pool, as the rye cover crop had taken up much of the released N. Average emissions from the Rye treatment were roughly half those of the Oats treatment during the final sampling.

Conclusions

The results of this study are clear: during the winter and spring period, when field N and P losses can be high, rye cover crops show great potential to mitigate negative environmental effects. The rye accumulated much greater biomass than oats in the fall, providing better winter cover to reduce runoff, erosion, and P loss potential. Rye also strongly reduced nitrate leaching in the soil profile, as nitrate concentrations at 20-inch depth were extremely low throughout the sampling period. Oats showed no improvement in nitrate leaching compared to the no-cover-crop option.

Rye did not reduce nitrous oxide emissions resulting from a simulated heavy rainfall event in early April, but showed a 70% decrease later in the month when it was actively taking up N and producing biomass. The oats had been winter-killed and therefore showed consistently high emissions throughout the spring period.

In all, the rye cover crop had significantly greater positive effects in terms of reducing P and N loss potentials, while the benefits of the oats were minimal. Although results may vary seasonally, the winter hardy rye cover crop should be given strong preference over oats when the primary objective is to reduce nutrient losses to the environment.

Acknowledgements: This research was supported through a grant from the USDA Northeast Region Sustainable Agriculture Research and Education program. We are grateful for the collaboration of John Fleming of Hardie Farms in Lansing, NY.