PageRank: Network Theory & Google

While Google’s modern internal search algorithms are unknown to the public, the details of one of their initial search algorithms, named PageRank, are widely known. In 1999, Sergey Brin and Larry Page published a research paper titled “The PageRank Citation Ranking: Bringing Order to the Web” that described a method to determine the relative importance of web pages using network models that ultimately helped power Google’s first search engine. The algorithm’s relationship with abstract network theory from class is relatively straightfoward: each web site on the indexable internet is viewed as a node in the graph, and a directed edge from node A to B indicates that webpage A has an outgoing link to webpage B. The goal of the PageRank algorithm is to quantify the relative importance of each node in the network based upon the number of other nodes that link to it.

The PageRank algorithm is conceptually centered around the idea of a “random surfer” browsing the internet. This surfer begins at a single web page and follows random on-page links to further web pages, thereby slowly exploring the entire network. When the surfer grows bored he is randomly transported to another website, which ensures that he will eventually visit all websites, even if they don’t have any inbound links. The basis of the PageRank algorithm is that the proportion of time this random surfer spends on a single web page is correlated with that web page’s importance; in other words, the more inbound links a web page has (which would cause the random surfer to more frequently visit it and therefore spend more time there), the more important the web page is.

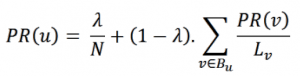

Mathematically, the PageRank of a single web page is defined as:

The variable N represents the total number of pages in the network, PR(u) represents the PageRank of node u, Lv represents the number of outgoing links found on page v, and Bu is defined as the set of pages that link to page “u”. The lambda variable is referred to as the “damping factor”, which represents the probability that the surfer will randomly jump to a brand-new page. Conceptually, to determine the PageRank of a page “u”, the formula first finds all pages that link to “u”; it then takes the PageRank score of each such page “v”, divides it evenly so it can be shared by all pages “v” links to, and counts one “slice” of the score towards “u”‘s score. Therefore, links from a webpage with a higher PageRank score have more impact than links from a webpage with a lower PageRank score.

To compute the PageRank for all nodes in a graph, the algorithm begins by giving each node a default PageRank, usually 1/N. The algorithm then begins by using the formula above to give each web page a new PageRank. Note, however, that the new PageRank for each node depends on the PageRank of other nodes; consequently, once the algorithm is finished giving each page a new PageRank, the values are already outdated as they were calculated using the previous set of PageRank values. Therefore, the algorithm continuously recomputes the PageRank, each time making the values slightly more accurate, until the values barely change from one iteration to the next. This point of convergence takes surprisingly few steps: for example, a network of 300 million links usually achieves convergence in less than 50 iterations.

When the process is over, each node is left with a value that corresponds to how important it is in the network, which can then be used to help rank websites in search engine results. While this concept was heavily used by Google’s search engine early on, the weight placed on a website’s PageRank has gradually diminished year-by-year as Google discovered new, more effective ranking measures. The key difference between PageRank and these newer methods is largely based on what the methods inherently examine: while PageRank solely considers a website’s relative importance, newer methods more closely examine the semantic similarity between websites and the search query, therefore placing more emphasis on what the user is truly searching for instead of the reputation of the website. Further issues, such as PageRank’s indirect encouragement of web spam, have lead to the slow demise of the algorithm that helped Google gain initial dominance in the marketplace. Regardless of its current usage, however, the PageRank is an interesting example of how basic network theory can be used in incredibly useful real-world applications.

Sources:

http://ilpubs.stanford.edu:8090/422/1/1999-66.pdf

http://infolab.stanford.edu/~backrub/google.html