Network optimization in the human brain

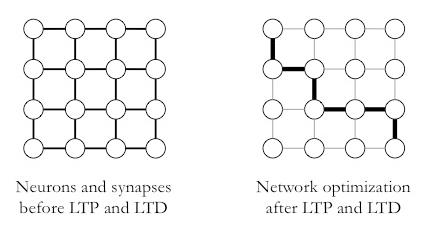

The human brain can be viewed as an elegant network of connections. The nodes are neurons. The edges are synapses: the junctions where one neuron passes a signal to the next, which at chemical synapses happens through neurotransmitters released across a narrow gap. The edges are directed, because action potentials travel along a neuron's axon to its terminals, where they act on the dendrites of the next neuron. Connections may also be positive or negative (excitatory or inhibitory synapses). These properties (nodes, edges, directionality, and the sign of each connection) are largely fixed once development is complete. One property, however, keeps changing over time: connection strength. The strength of synaptic connections is continually updated through learning. This capacity, known as synaptic plasticity, allows neural networks to optimize themselves, so that frequently used pathways become stronger while less important pathways become weaker.
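To make the network picture concrete, here is a minimal Python sketch that assumes a toy model. Each synapse is a directed edge with a fixed sign (+1 excitatory, -1 inhibitory) and a mutable, non-negative strength. The class `SynapticNetwork` and its methods are hypothetical illustrations, not code from the source paper.

```python
from dataclasses import dataclass, field
from types import MappingProxyType


@dataclass
class SynapticNetwork:
    """Hypothetical toy model: neurons are nodes, synapses are directed,
    signed, weighted edges. Wiring and sign are fixed at construction;
    only connection strength can change afterwards."""
    neurons: frozenset
    sign: dict  # (pre, post) -> +1 (excitatory) or -1 (inhibitory)
    strength: dict = field(default_factory=dict)  # (pre, post) -> strength >= 0

    def __post_init__(self):
        for pre, post in self.sign:
            if pre not in self.neurons or post not in self.neurons:
                raise ValueError(f"unknown neuron in synapse {pre} -> {post}")
            self.strength.setdefault((pre, post), 1.0)  # baseline strength
        # Freeze the wiring: store a read-only view of a private copy.
        self.sign = MappingProxyType(dict(self.sign))

    def weight(self, pre, post):
        """Signed effective weight of pre -> post; 0.0 if no synapse exists."""
        if (pre, post) not in self.sign:
            return 0.0
        return self.sign[(pre, post)] * self.strength[(pre, post)]

    def adjust(self, pre, post, delta):
        """Plasticity: change an existing synapse's strength, clamped at zero."""
        if (pre, post) not in self.sign:
            raise KeyError(f"no synapse {pre} -> {post}")
        self.strength[(pre, post)] = max(0.0, self.strength[(pre, post)] + delta)


net = SynapticNetwork(
    neurons=frozenset({"A", "B", "C"}),
    sign={("A", "B"): +1, ("C", "B"): -1},
)
print(net.weight("A", "B"), net.weight("C", "B"), net.weight("B", "A"))  # 1.0 -1.0 0.0
net.adjust("A", "B", 0.5)  # strengthen the excitatory A -> B synapse
print(net.weight("A", "B"))  # 1.5
```

The wiring is stored as a read-only mapping, so the sketch mirrors the idea that connections and their excitatory or inhibitory identity stay fixed. Only `adjust` models plasticity. The reverse lookup `B -> A` returns 0.0, which reflects directionality.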

In the source paper, Achler and Amir discuss where artificial intelligence falls short of animals' natural ability to learn through synaptic plasticity. Principles of animal learning could nonetheless be applied to artificial intelligence to aid learning, through an algorithm that carefully assesses and assigns weights to patterns. The principle of Hebbian learning, proposed by the Canadian psychologist Donald Hebb in 1949, is often summarized as "what fires together wires together." The idea was later developed into an account of synaptic plasticity: changes in connection strength that occur when two connected neurons are repeatedly activated together or in rapid succession. If the connection between the neurons strengthens, the change is called long-term potentiation (LTP). If it weakens, the change is called long-term depression (LTD). By selectively strengthening or weakening pathways, these changes shape the flow of information within the neural network. This reinforcing feedback is thought to be a key mechanism underlying associative learning and memory consolidation.
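Hebb's postulate is often written as a simple weight-update rule, Δw = η · x_pre · x_post, where η is a learning rate. The Python sketch below applies that textbook rule to a made-up record of firing activity (1 = fired, 0 = silent). The function name, the learning rate, and the trial data are illustrative assumptions. This is not the weighting algorithm Achler and Amir propose.

```python
def hebbian_update(weights, pre, post, lr=0.1):
    """Plain Hebbian rule for one postsynaptic neuron: dw_i = lr * pre_i * post.

    weights: strengths of the synapses onto the neuron
    pre:     presynaptic activities (1 = fired, 0 = silent)
    post:    postsynaptic activity (1 = fired, 0 = silent)
    """
    return [w + lr * x * post for w, x in zip(weights, pre)]


# Hypothetical activity record of (presynaptic pattern, postsynaptic output).
# Input 0 fires every time the output fires; input 1 fires only when the
# output is silent; input 2 fires with the output on some trials but not others.
trials = [
    ([1, 0, 1], 1),
    ([1, 0, 0], 1),
    ([0, 1, 1], 0),
    ([1, 0, 1], 1),
    ([0, 1, 0], 0),
    ([1, 0, 0], 1),
]

w = [0.5, 0.5, 0.5]  # all synapses start at the same baseline
for pre, post in trials:
    w = hebbian_update(w, pre, post)

print([round(x, 2) for x in w])  # [0.9, 0.5, 0.7]
```

Input 0 always fires together with the output, so it ends up strongest. Input 1 never does, so it stays at baseline. This plain rule can only strengthen synapses. It therefore captures LTP-like growth but not LTD.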

Initially, the connection between two neurons sits at a baseline level: it is neither strong nor weak. Repeated high-frequency activation of the synapse, known as tetanic stimulation, can push it past the threshold for LTP, and the synaptic weight (connection strength) increases. Once a synapse has been strengthened in this way, the pathway is easier to activate in the future and remains favored for hours or longer. LTD is the opposing process, typically induced by prolonged low-frequency stimulation, through which synapses are selectively weakened. Together, LTP and LTD refine learning, regulate the formation and decay of memories, and keep information flowing efficiently through the brain. Similar optimization of information flow appears in many other networks. Examples include the links between Internet users and web pages, the connections among individuals in social networks, and the learning algorithms used to improve artificial intelligence.
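The threshold behavior described above can be sketched with a BCM-style rule (after Bienenstock, Cooper, and Munro): Δw = η · x · y · (y − θ). Under this rule, strong activation produces LTP and weaker activation produces LTD. It is a swapped-in textbook model, not the source paper's method. The sketch simplifies it in two ways:

- The threshold θ is fixed here, whereas the original BCM rule slides θ with recent activity.
- Postsynaptic activity y is imposed directly, as in a stimulation protocol, rather than computed from w.

All parameter values are arbitrary assumptions.

```python
def bcm_update(w, x, y, theta=0.5, lr=0.1):
    """One step of a BCM-style rule with a fixed modification threshold theta.

    x: presynaptic activity, y: postsynaptic activity (arbitrary units, >= 0).
    y > theta       -> weight grows (LTP-like)
    0 < y < theta   -> weight shrinks (LTD-like)
    Strength is clamped at zero so the synapse cannot change sign.
    """
    return max(0.0, w + lr * x * y * (y - theta))


baseline = 1.0
strong, weak = baseline, baseline
for _ in range(10):
    strong = bcm_update(strong, x=1.0, y=1.0)  # strong, tetanus-like drive
    weak = bcm_update(weak, x=1.0, y=0.2)      # weak, low-frequency drive

print(round(strong, 2), round(weak, 2))  # 1.5 0.94
```

When the synapse is driven above θ, it potentiates from the baseline of 1.0 to about 1.5. When it is driven weakly, below θ, it depresses to about 0.94. Only the level of postsynaptic activity relative to the threshold decides the direction of change.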

Source: “Neuroscience and AI Share the Same Elegant Mathematical Trap” by Tsvi Achler and Eyal Amir, University of Illinois at Urbana-Champaign.

http://reason.cs.uiuc.edu/tsvi/Elegant%20Math.pdf