Bayes Theorem in Machine Learning

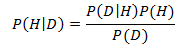

Bayes’ Theorem is the fundamental result of probability theory – it puts the posterior probability P(H|D) of a hypothesis as a product of the probability of the data given the hypothesis(P(D|H)), multiplied by the probability of the hypothesis (P(H)), divided by the probability of seeing the data. (P(D)) We have already seen one application of Bayes Theorem in class – in the analysis of Information Cascades, we have found that it is possible for rational decisions to be made where one’s own personal information is discarded, based upon the conditional probabilities calculated via Bayes’ Theorem.

Such a powerful concept, and succinctly summarized in the following formula,

, Bayes’ Theorem is the basis of a branch of Machine Learning – that is, of the Bayesian variety.

The tautological Bayesian Machine Learning algorithm is the Naive Bayes classifier, which utilizes Bayes’ Rule with the strong independence assumption that features of the dataset are conditionally independent of each other, given we know the class of data. We can apply this to spam filtering, which is the focus of this blog.

In spam filtering, we would like to classify messages as spam or non-spam. We first learn a decision rule, then apply this rule to new incoming messages. The essence of Naive Bayes is in the model creation process. To model the probability of a word, “w”, Naive Bayes makes the important assumption that all words, denoted by the vector is conditionally independent the class of the message (spam or non-spam). That is, if the message is spam, the word “Nigerian” and the word “Prince” are conditionally independent. This classifier is aptly named – it is naive to assume this conditional independence.

But given this conditional independence, we can write the probability of a message being spam as

The conditional independence assumption gives a very elegant formula above, that at its core, is a repeated application of Bayes’ Rule, for each word in the message. This is called the multivariate Binomial Naive Bayes Classifier, and from research by Metsis, Androutsopoulos et. al, we find in real life spam classification problems, it averages a recall rate (proportion of actual positives correctly classified) of 93%.

As an extension of Bayes Rule, we introduce the Multinomial Naive Bayes model to the spam filtering problem. Instead of having the words being independently chosen in a binary fashion, we let each word be chosen from a multinomial distribution that contains the entirety of words seen in examples. Thus, this application of Bayes Rule is in a slightly different context – each word in the message is now conditionally independently generated from a “bag of words”, where this bag contains all the words seen in examples and the condition is the same as before, denoting whether the message is spam or not spam. The researchers obtained an average recall of 97%, a significant improvement over the Binomial Naive Bayes.

As seen both in the information cascade networks seen in lecture and its application in Machine Learning in the form of Naive Bayes, Bayes Theorem is a fundamental formula core to our everyday life.

Joyce, James, Bayes’ Theorem, <http://plato.stanford.edu/entries/bayes-theorem/>

Ghahramani, Zoubin, Bayesian Machine Learning, <http://mlg.eng.cam.ac.uk/zoubin/bayesian.html>

Manning, Christopher, Naive Bayes Text Classification, <http://nlp.stanford.edu/IR-book/html/htmledition/naive-bayes-text-classification-1.html>

EDIT: Forgot to sign blog. (Sorry)