Cause and prevention of cascading failure in distributed computer systems

https://www.infoq.com/articles/anatomy-cascading-failure/

Large tech companies usually maintain a fleet of servers to serve the customer’s requests, so that if a few, or even a thousands of server go down, there will still be enough backup servers to take up the roles to ensure that the service doesn’t appear to be down to the end customers. Therefore, you might be quite surprised that sometimes even Google and Facebook have their service being unavailable for hours, given the large number of backup and decade of technical expertise they have. However, if we think about this problem in terms of network cascade, we can see that some unlucky sequence of events can turn the mechanism to prevent service downtime against itself.

Cause

We can model tech companies’ server fleet as a network of computers. Computers are the vertices in the graph. An edge between two computers mean that they can communicate directly to each other. For the purpose of this problem, we mainly focus on one aspect: suppose there is an edge between A and B, then when A fails, then a fraction of traffic can be re-routed to B. (The exact fraction depends on the number of neighbors of A) Of course, a server cannot handle infinite number of requests. When the server is handling too many requests, in our case, handling too many requests that are re-routed from other servers, it might become unresponsive, appear dead, and get killed by some distributed system coordinator.

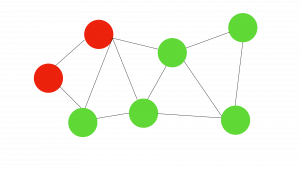

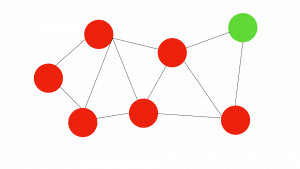

Now we can look at some concrete example, modeled in the language of cascading. A server has two possible behaviors: either live (L) or dead (D). Let’s assume that if two of the neighbors of a server died, then the server will also be overwhelmed and die shortly. In addition, we have the following graph:

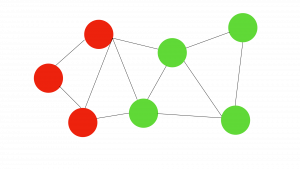

Now suppose under some unlucky scenario (maybe you are performing some updates to some system that reduces its serving capacity, or you suddenly received a lot of new requests due to sudden increase in popularity), two servers have died. In the following graph, they are marked with red.

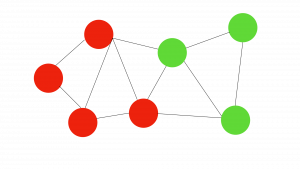

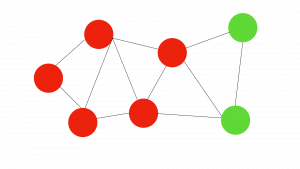

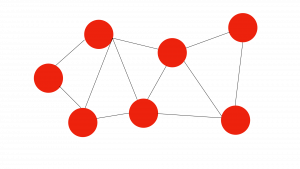

Now using the cascading rule, you can see that the entire system will be gradually taken down:

Of course, if you are a human/robot coordinator of the system, you don’t want the cascading effect to happen. Therefore, when you see the first two servers down, you might try to restart them. However, the restart will take time, and by the time they are completed, you might already be in this scenario, where the servers just restarted will be overwhelmed by neighbors’ request again.

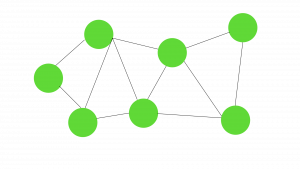

Therefore, as the linked article said, the quickest way to recover from this, quite unintuitively, is to completely shut down the system and restart simultaneously again. Although it might cause downtime worldwide, it avoids doing futile partial restart work that will be quickly undone by cascading effect.

Prevention

In the linked article, it mentions that re-routing requests to nearby server is an antipattern. From the user experience perspective, it might be counter-intuitive, because this ensures that user will be still be served by the closest available server to reduce data transmission time. However, this practice can easily enable the cascading behavior, since a local cluster of failure can easily cause the cascading effect, and numerous far-away servers can do nothing to mitigate it. Thus, to reduce the likelihood of such failure, we should also connect some far-away servers together.

The article also mentions that we should try to reduce startup time. In this model, it can help us avoid a complete shutdown, because if we can restart servers fast enough, we will be able to recover just enough capacity in time to avoid further cascading.