Audiovisual Preservation Conferences Recap

International Association of Sound and Audiovisual Archives (IASA) Annual Conference 2016

Tre Berney and Karl Fitzke represented CUL at this year’s International Association of Sound and Audiovisual Archives annual conference, held at the Library of Congress in Washington, DC. Karl is serving as a reviewer for IASA Journal and Tre participated in two panel sessions as part of the program. We began the week by attending the closed Technical and Education Committee meetings on Sunday at the Smithsonian’s National Museum for the American Indian. Topics discussed by the committee included emerging technologies in video analysis, including “waveform-style” visual tools for video, interdisciplinary languages for security, and curriculum design for small, cultural heritage archives with AV holdings.

The programming included talks on strategies for file validation and packaging strategies, the ongoing development of open source tools, Open source codec and wrapper developments for file types, preserving supplemental to AV materials, cultural curation, connecting archives to communities, anticipating user requirements, managing DPX film scans and expectations and much more. While Cornell Library AV preservation is advanced, managing the digitally produced content is still a struggle and we’re working closely with CUL-IT and IT@Cornell on strategies and solutions. Tre spoke about collaborations between IT and AV preservation units at IASA, along with Erica Titkemeyer (Southern Folklife Center – UNC), David Ackerman (Harvard), and Jon Dunn (Indiana University). (http://2016.iasa-web.org/promoting-it-and-av-preservation-collaboration-university-libraries)

Tre also spoke about the Cornell Hip Hop Collection in the context of preserving cultural movements and fandom along with John Bondurant and Jeremy Brett (Texas A&M), and Dr. Francesca Coppa (Muhlenberg College). (http://2016.iasa-web.org/remix-culture-archiving-and-preserving-transformative-works-media-fandom-and-hip-hop-cultures)

It was another really solid week with some of my favorite colleagues from around the globe with a focus on global cultural heritage and human record. I look forward to the publication of the TC-06, IASA’s next Technical Committee report, focused on recommended standards for video preservation. They remain an important resource for our work here at CUL.

Association of Moving Image Archivists Annual Conference 2016

Earlier this month the AV team (Tre, Karl and Desi) attended the Association of Moving Image Archivists (AMIA) annual conference in Pittsburgh, PA. The conference coincided with the results of the presidential election which set the stage for conversations about diversity and inclusion within the organization and advocating for better representation of the same within archival collections. To that purpose, a mini-symposium was held on community archiving and how to serve organizations without resources to adequately care for their materials. In line with this type of outreach, Desi participated in a workshop where AMIA members helped to process AV collections and educate local organizations on media preservation.

This year we worked with three Pittsburgh organizations: City of Asylum, a group that provides sanctuary to exiled authors, publishes their work and holds events for them to share it; Attack Theatre, a group that makes art accessible by bringing it to under-served communities; and David Newell, better known as Mr. McFeely from Mr. Rogers’ Neighborhood, to help inspect and process his collection of films.

Karl and Desi also participated in a workshop on digital preservation which gave an introduction to command line interface and open source tools used in audiovisual digitization and file storage. This type of technical know-how is exceedingly important as we migrate deteriorating AV formats to digital ones that need an increased level of care as technology constantly advances. There were many sessions that dealt with metadata, access, and collections policy and even a session on how archivists plan to address the increasing amount of police body-cam footage and its implications. All in all, it was an eventful and productive time and we are happy to be a part of an organization actively caring for cultural heritage and conscious of the diversity it represents.

Tre Berney and Desiree Alexander, Digitization and Conservation Services, DSPS

Writing Accessible Web Content

In Digital Scholarship & Preservation Services, we typically run our websites through an accessibility checker before they are launched, to ensure that sites can be accessed by all users, including those with disabilities (whether visual, hearing, motor or cognitive).

While we can make our sites accessible by using good front-end coding practices, content writers can also help us clear accessibility errors. In Tips on Writing for Web Accessibility, W3.org outlines some simple ways that you can write more accessible content:

- Provide informative, unique page titles

- Use headings to convey meaning and structure, and use them in the proper order (H1, H2, H3, etc.).

- Make link text meaningful (e.g. avoid “click here”)

- Write meaningful text alternatives for images

- Create transcripts and other captions for multimedia

- Provide clear instructions and avoid technical language (e.g. when writing help text)

- Keep content clear and concise

Check out Top 10 Tips for Making Your Website Accessible for additional accessibility tips, and Tips on Writing for the Web for general advice on writing for the web.

Exciting Times for arXiv: Grants from the Sloan Foundation and the Allen Institute for Artificial Intelligence Usher the Next-Gen arXiv Initiative

We are pleased to receive a $450,000 grant from the Sloan Foundation and $200,000 from the Allen Institute for Artificial Intelligence (AI2) to start establishing a new technical infrastructure for arXiv. The funds from the Sloan Foundation will initiate the design of a modern, scalable, cost-efficient, and reliable technical infrastructure for arXiv. The additional support from the AI2 will allow us to focus on innovation and new features to strengthen quality control workflows and tools that make arXiv a highly-regarded service.

arXiv, a globally-used and integral tool for disseminating research findings in the physical sciences, relies on a 25 year-old code base in urgent need of modernization. As part of its 25th anniversary vision-setting process, the arXiv team at Cornell University Library conducted a user survey in April 2016 to seek input from the global user community about arXiv’s current services and future directions. We were heartened to receive 36,000 responses from 127 countries, representing arXiv’s diverse, global community (see the survey findings). The prevailing message is that users are happy with the service as it currently stands, with 95% of survey respondents indicating they are very satisfied or satisfied with arXiv. Furthermore, 72% of respondents indicated that arXiv should continue to focus on its main purpose, which is to quickly make available scientific papers, and this will be enough to sustain the value of arXiv in the future. Based on the conclusions the 25th anniversary vision-setting process, we anticipate that a multi-phase design and development of a next-generation arXiv (arXiv-NG) will require approximately 3 years with a $3+ million budget, including requirements specification, evaluation of alternate strategies and partnerships, design of a new system architecture, assessment, and deployment.

The funds from the Sloan Foundation enables us to begin the first phase to transition the arXiv service into a modern, extensible, and flexible architecture. We will create a comprehensive plan that factors in a range of issues extending from architectural choices to sustainability requirements, and from policy issues to governance matters. An integral part of this initiative will be networking with other related initiatives and striving to serve the broader scientific community. The arXiv-NG team includes Jim Entwood (Operations Manager), Martin Lessmeister (Lead Developer), Sandy Payette (CTO), Oya Rieger (PI & Program Director), and Gail Steinhart (arXiv-NG Project Coordinator).

The funds from the AI2 will enable us to establish a collaboration between the Cornell University Library and the Cornell Computing and Information Science (CIS). We will hire a Research & Innovation Fellow to partner with the arXiv team in designing and integrating a series of updated, research-oriented features for arXiv. The Fellow will be an experienced software engineer who will split his/her efforts between conducting research as a part of the CIS team and working closely with the arXiv staff at CUL on conceptualization, testing, and deployment of the new modules. This CUL/CIS collaboration will enable the integration of tools emerging from research into the production system to improve user and moderator experience.

The ultimate goal is ensuring that arXiv continues to serve its essential role in facilitating science based on the needs of the user community, as well as responding to evolving scholarly communication practices. We are excited about initiating the arXiv-NG project and look forward to providing you with updates.

Oya Y. Rieger

on behalf of the arXiv team

News from eCommons, CUL’s General Purpose Institutional Repository

On behalf of the eCommons team, I’d like to share some news on new services for eCommons users, and some other things we’ve been working on. My apologies for being slow to recognize the need and the opportunity to communicate news of this kind to you. For the most part, I’ll communicate eCommons news by forwarding to CU-LIB the communications that I send to eCommons collection coordinators, and I’ll include the first of those updates in this post. AND you might wonder, what is a collection coordinator?

eCommons collection coordinators have some level of administrative responsibility for one or more collections in eCommons. Not every collection has a coordinator, but many do. Since this hasn’t been well-documented in the past, we’ve recently started trying to identify collection coordinators, and to document the administrative details of the collections for which they’re responsible. Examples of the types of type of collection information we’re after include whether a collection has any limitations as to who may submit to it, whether a collection includes student works and how FERPA releases are handled, whether and how deposit to the collection is mediated by eCommons staff, and whether the collection coordinator has been made aware of eCommons policies and recommended best practices. This effort is moving along slowly but steadily, beginning with eCommons users with whom we’re in the most frequent contact, users who contact us with questions or issues related to a particular collection, and with the requestors of new communities and collections. There is wiki to which coordinators have access, and a very low traffic email list. There isn’t a lot to see on the wiki because collection information is limited to the people associated with the collection, but for an idea of what we’re trying to track, you can view the Test managed collection. If you’re reading this and would identify yourself as a coordinator for one or more eCommons collections, please get in touch with us!

Now about the updates to collection coordinators. Our first one was about managing FERPA releases, and here that is:

= = = = = = = = =

Greetings,

This message is being sent to eCommons coordinators, individuals who are listed as a contact or coordinator for one or more collections in eCommons. Our intention is to use the list to notify coordinators of any significant changes to eCommons, substantial service interruptions, and news regarding services and policies. We anticipate traffic on this list will be low. If you have received this message in error, please contact me.

Here’s our first news item for this list, for programs or units whose students submit work to eCommons. While it is the responsibility of those units to secure and retain FERPA releases, we have taken a couple of steps with eCommons to make this simpler, depending on who completes the submission process:

- Student submissions: we have updated our eCommons license to include a FERPA authorization statement. When students submit their own work to eCommons, if they complete the submission themselves and accept the license, they authorize Cornell to make the work publicly accessible online.

- Proxy submissions: if anyone other than the student completes the submission, the program is responsible for obtaining a FERPA release, whether it is program staff or library staff who complete the submission. Historically, programs have had students complete paper FERPA release forms, and have retained those documents. That’s fine and programs may continue to do that, but we now also offer the option of storing a PDF of the signed release form with each work in eCommons. Stored release forms are not visible to the public. If you are interested in using this option to manage retention of FERPA releases for student works, please contact ecommons-admin@cornell.edu and we’ll work with you to accomplish this.

We hope these options will simplify things for those of you who choose to use them.

Best wishes,

Gail

= = = = = = = = =

You can expect a few more updates to come soon – in the meantime pleased don’t hesitate to contact the eCommons team with any questions, ideas, or concerns.

eCommons team: Mira Basara, Christina Harlow, George Kozak, Wendy Kozlowski, Chloe McLaren, Gail Steinhart

2016 Chinese Institutional Repository Conference

On September 21, 22 & 23 I represented arXiv at the 2016 Chinese Institutional Repository Conference at Chongqing University. China’s use of arXiv is rapidly growing; they are now 2nd to the US in terms of arXiv site traffic. This was a great chance to meet with librarians from Chinese Universities as well as from the Chinese Academy of Sciences to give an update on arXiv and discuss potential collaborations.

Among the presenters there were many common threads: the desire to reduce library costs; motivating researchers to post to repositories; interoperability; standardizing meta-data; various software options used among different institutions and challenges to keep software updated. One of my favorite talks was by Dr Alan Ku who focused on the psychology of the repository and the libraries ability to engage researchers. The three main ways to get researchers to use repositories he said was with a pistol, money, or education. However the librarians do not have pistols. Nor do they have money to offer the researchers. Education he pointed out takes a long time. Alan emphasized the importance of training data librarians that can find ways to connect with researchers on the meaning and value of the repositories. I also learned a great deal from the other invited speakers about open access, metadata harvesting, research data management, and IR approaches in other countries that have more centralized approach than the US.

With regard to arXiv I had assumed everybody in the audience was familiar with what arXiv was and so my talk focused more on updates, future plans, statistics, and behind the scenes operations rather than giving a clear definition and overview. Most of the speakers referred to arXiv during their presentations including those that were given in Chinese. However based on a couple of the questions there is still a need to clarify what arXiv is, the role it can play as a tool for these libraries, and the distinction between arXiv as an international resource versus Cornell’s own institutional repository. Fortunately the conference organizers had prepared a beautiful 100 page brochure about arXiv that was translated into Chinese and handed out to all the 300+ attendees. After my talk I met with the Chinese arXiv service group and had some interesting discussions about quality control of articles, outreach to researchers, the emergence of various “Xiv”s, and how libraries can support arXiv.

The food provided by the conference hosts featured local cuisine called a ‘hot pot’ which features a central bubbling vat of intensely spicy broth full of peppers. Around this we were served a variety of options that you cook yourself in the hot pot before eating so that you can vary the time and intensity (at least somewhat). The vegetables, tofu, and fish was excellent. I was not as adventurous as the others with the duck intestine and chicken feet. One of the invited guests accidentally bit into a hot pepper and had his tongue go numb for the rest of the day.

A personal account of the trip with additional photos is at: http://www.entwood.org/jim-china-2016/

- Touring Dazu Rock Carvings

- Dazu Rock Carving

- At Dazu

- Hot pot in center of the table over an open flame. Bottom centered are the duck intestine.

- Forbidden City

- Great Wall

- Tiananmen square

- Summer Palace

Scholarly Communication Working Group Sprint to Develop Author Rights Resources

Outreach to promote effective author rights management was identified as a high priority project for members of the Scholarly Communication Working Group (SCWG), and the Library Directors Leadership Team also raised this as an important issue in their introductory meetings with Director of Copyright Services Amy Dygert and Scholarly Communication Librarian Gail Steinhart. The SCWG turned its attention to this topic this past summer, issuing a call for volunteers, and forming an author rights outreach team: Jim DelRosso and Ashley Downs (co-leads), Amy Dygert, Kate Ghezzi-Kopel, Lara Kelingos, Melanie Lefkowitz, Gail Steinhart, and Drew Wright.

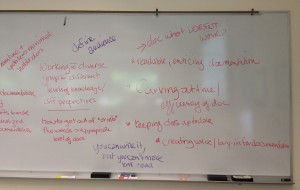

We had two main objectives: to develop a collection of materials for librarians to use when counseling faculty, grad students, and other scholars on maintaining their own rights as authors, and to accomplish the work in an efficient (and hopefully fun) way. We’ll present the resulting resources at the October 2016 Reference and Outreach Forum, so I’ll describe them only very briefly here, and then describe our approach.

First, the resources, all of which are accessible via a new page on the Liaisons@CUL wiki: author rights outreach resources for liaisons:

- A new library guide – Because author rights are not treated thoroughly in any existing Cornell Library guides (though some brief references do appear), we decided to develop one dedicated to the topic: http://guides.library.cornell.edu/authorrights.

- A toolkit for writing to faculty or departments about author rights – The toolkit includes guidance for library staff when crafting a message, boilerplate language organized by topic, and sample correspondence to adapt and use.

- A slide deck (PowerPoint) with suggestions and notes, designed for an approximately 10-minute presentation to faculty or graduate students.

How did we do it? A few of us have worked on projects or groups that chose to focus their work into a single (or few) longer working sessions (think “sprint” from agile software development), rather than approaching work in a more traditional committee fashion. We’ve found those experiences to be productive and rewarding, and the work gets done pretty quickly and efficiently.

A little planning goes a long way to make a sprint like this productive, so we decided in advance what we wanted our deliverables to be, what key concepts we should cover, what preparatory work should be done (i.e. setting up a new, empty library guide), and shared and reviewed background materials via Box. Ashley and Jim developed an agenda for the day, planning to divide us into two working groups, one focused on the library guide and one focused on the other resources. We started with coffee and treats that people brought (important!), and broke our day into four 90-minute blocks. For each block, the first 60 minutes were reserved for work, and the final 30 for reporting back and discussion among all participants. People were allowed to change groups mid-way through the day.

By the end of the day, we were nearly done. While most materials needed a little further refining, and we wanted to seek feedback from prospective users of the materials (primarily liaison librarians), we were very pleased with what we’d accomplished. Granted, it took us a few weeks to put the finishing touches on everything (N.B.: make sure your follow-up plan is solid and time-bound!), but it hasn’t taken weeks of actual work to finish this off. Overall, we felt this approach worked very well.

We enjoyed the process, and we hope you find the results useful!

Signed, the author rights outreach team (Jim DelRosso and Ashley Downs (co-leads), Amy Dygert, Kate Ghezzi-Kopel, Lara Kelingos, Melanie Lefkowitz, Gail Steinhart, and Drew Wright)

Digital Curation Services at Code4Lib NYS 2016

Code4Lib NYS 2016 Unconference was held at Cornell’s Mann Library, Thursday & Friday, August 4-5, 2016. The Digital Curation Services team (Mira Basara, Dianne Deitrich, and Michelle Paolillo) attended both days, and found many opportunities to sharpen our skills in the service of digital curation, as well as opportunities to network with colleagues beyond Cornell. The three of us had different interests in attending the unconference, as noted below. Our varied perspectives reflect the nature of digital preservation itself: how it is integrated with the many other activities of the digital life cycle, and the broad range of skills that come into play in the service of long-term assurance for our digital assets.

Mira: I was very impressed with the number and quality of workshops and sessions offered on Code4Lib. I attended two mornings of a workshop called “Command Line Interface Basics” led by Francis Kayiwa (Virginia Tech). The workshop covered the user and programming interface of the UNIX Operating System. Even though I have been using UNIX Shell for years my knowledge was spotty and this workshop really filled gaps that I have been missing, such as different ways to edit files or filter files, and communication and file archiving. Another interesting session was Introduction to Hydra, which again provided a great insight into ActiveFedora based model. I feel this will give me better insight of the overall system. Having a basic understanding of the model will help me if my work leads me to use or administer Hydra-based systems.

Dianne: When I saw a hands-on Fedora 4 workshop advertised on the Code4Lib schedule, I knew I had to be there. I’m the sort of person who learns best by diving right into using a particular system. The Fedora repository software has always seemed a bit mysterious to me; I’ve pulled content from a Fedora 3 repository through my past work with electronic theses and dissertations, but beyond that, I wasn’t really sure of its inner workings. Our instructors, Esmé Cowles (Princeton University) and Andrew Woods (Duraspace), were great — and in addition to providing a high-level overview of Fedora, they provided an introduction to the world of resource modeling, access control, and Apache Camel integration. I was really impressed by how accessible they made the content, and how often they checked in with us to make sure that nobody was lost or too far behind. While I might not be working with Fedora repositories directly, these workshops provided some invaluable context that I can use to understand our own repository infrastructure.

Michelle: I was happy to spend much of the conference in the Write-The-Docs inspired workshop sharpening my documentation skills. Cristina Harlow (Cornell) and Gillian Byrne (Ryerson) led us in a well-designed experience through both morning sessions. Together we explored possible structures of documentation, and defined the elements that are necessarily included in complete documentation. Then we shifted to examples of documentation in an open critique. We shared examples of documentation we had all run across, explaining what we liked, or did not like, and possible ways to improve them. After this we had opportunity to work on improving our own documentation. I worked on making some templates in the CULAR wiki, such that when we add new collections, the template already has prompts for the information that should be captured. We are encouraged to share our documentation and templates back to the Write-the-Docs community. Over all, I feel this session has helped me streamline the process of documentation, and to clarify my focus as I write.

The keynotes themselves were powerful reminders that our efforts exist in a context of humanity and ethics. Patricia Hswe’s address served as a reminder that sound relationships among project and service teams are just as important to the project or service success as the technology components (and fundamentally so). She reminded us that the emotional labor of listening to and acknowledging the needs of stakeholders is important for building successful systems; the degree to which we can become comfortable with uncertainty is the same degree to which we can influence the outcomes of our projects for the better. Tara Robertson challenged us to consider a broader range of ethical questions when making content available. We are all familiar with the notion that the ease of making content available digitally may deceive us as to the legality for doing so. But even when legal right is assured, harm can come to communities if we insist on our right to provide unfettered access, especially to minority communities that may be missing from the conversation that informs any such decision. Through several examples, she challenged us to transform our profession; to ask not only whether we had legal rights to provide access, but to engage in the ethical question of who we might harm by exercising these rights. These two keynotes were reminders of the reach of the impact as we preserve content and make it available; our mindfulness in this realm can help steer us towards better waters as we navigate the digital age.

We are indebted to Christina Harlow for a well-organized, engaging conference that was so conveniently located. She will say that she had a lot of volunteer help; doubtless this is true, but it is also true that the success of this conference is largely due to her initiative and follow-through. This conference provided us with a conveniently located context to sharpen our skills and add tools to our “digital curation toolbox”. Many thanks!

Findings of the 2CUL Study on Developing e-Journal Preservation Strategies

Faculty and students have increasing dependency on commercially-produced, born-digital content that is purchased or licensed. According to a recent Ithaka S+R study on information usage practices and perceptions, almost half of the respondents strongly agreed that they would be happy to see hard copy collections of journals discarded and replaced entirely by electronic collections (see Figure below). This strong usage trend raises some questions about the future security of e-journals and if and how they are archived to ensure enduring access for future users. Evidence indicates that the extent of e-journal preservation has not kept pace with the growth of electronic publication. Studies comparing the e-journal holdings of major research libraries with the titles currently preserved by the key preservation agencies have consistently found that only 25-30%, at most, of the titles with ISSN’s currently collected have been preserved.

With funding from the Mellon Foundation, during 2014-2015 Columbia and Cornell Universities (2CUL) conducted a 2-year project to evaluate strategies for expanding e-journal preservation. The project team included Shannon Regan, Joyce McDonough, Bob Wolven (co-PI) from Columbia University Libraries and Oya Y. Rieger (co-PI) from Cornell University Library. It was a follow-up study to expand on the results of a Phase 1 2CUL project that looked into a number of pragmatic issues involved into deploying LOCKSS and Portico at Cornell and Columbia. The key research questions of the Mellon-funded study included: What is not being preserved?; Why are they not being preserved?; and How do we get them preserved? The purpose of this blog is to share some of the key recommendations and highlight challenges faced during the study. The report that describes the methodology and findings of the study is available on the project wiki.

Recommendations for Further Action

Major Publishers: As libraries and licensing agencies negotiate new licenses or renew existing licenses, publishers should be asked to specify any licensed content excluded from the license’s provisions for archiving. We need to engage CRL to explore how the recently revised model license can be further enhanced by broadening the archival information section.

Ensuring Continuity: The preservation status of e-journal titles may change as titles move from one publisher to another. The Enhanced Transfer Alerting Service maintained by the UKSG provides information that could be effectively used to monitor such changes.

Open Access E-Journals: Freely accessible e-journals comprise the largest, most diverse, and in all likelihood most problematic category for preservation. Columbia and Cornell will work with members of the Ivy Plus group of libraries to assess the feasibility and cost of implementing a Private LOCKSS Network to preserve the pilot collection developed in Archive-It.

Technical Development: As digital formats become more complex and new research methods emerge (e.g., text mining), just-in-case dark archiving solutions will be harder to justify from cost-effectiveness and return-on-investment perspectives. It will be beneficial for the stakeholders to reconsider the current assumptions that underlie significant initiatives such as CLOCKSS, LOCKSS and Portico.

Information Exchange: At present, up-to-date information about preservation status is not included in the systems and knowledge-bases libraries use to manage e-journal content (although the Keepers Registry has significantly enhanced the ability to query the preservation status of individual titles). This inhibits libraries’ ability to consider preservation as a factor in collection development and collection management.

Setting Priorities: One barrier to effective action has been the sheer number of e-journal titles that are not preserved. More discussion among libraries is needed to build consensus around priorities for action on titles provided through aggregators and on freely-accessible e-journals.

University/Library Publishers: University libraries engaged in publishing should develop a consistent approach to preservation, including open declaration of their archiving policies and practice. This work should help to inform, and be informed by, CRL’s exploration of a “TRAC light” certification.

Challenges

The project team’s most significant impediment was simply the time required to explain the purpose of the project, including libraries’ expectations and needs regarding preservation of e-journals, to many parties with diverse backgrounds and perspectives. Publishers, editors, and aggregators each had different degrees of awareness of issues, but also different understanding of the meaning of terms such as “preservation” and “archiving.” Adding to this challenge was the fact that preservation is not the highest priority for most of the parties we worked with.

Perhaps the most surprising challenge was the degree of questioning we encountered within the library community itself regarding the importance of taking action to preserve e-journals. This was expressed as a combination of (in our view, misplaced) confidence that publishers and aggregators can be relied on to archive their own content, plus doubts about the technical and economic reliability of existing third-party preservation agencies. The reluctance from librarians to aggressively pursue e-journal preservation may be influenced by confusion as to where the responsibility for preservation lies: with publishers, third party agencies, or libraries.

Individual libraries, despite their concern for preservation, often lack effective means for taking action. Selection and acquisition processes may not involve any direct interaction with the publisher; many titles are acquired as parts of large packages, with no comprehensive provision for preservation. While stewardship of print journals was recognized as a core function of libraries, today commercial publishers provide access to digital content and manage content. Preservation, formerly a distributed activity for printed material controlled at the local level, has come to rely on centralized infrastructures and action in the case of digital material, without clearly defined roles for those staff charged with responsibility for preserving library collections. Some libraries have sought to include provisions for archiving in their e-journal licenses, either through direct deposit of content with the library or, more often, through third-party agencies.

E-journal archiving responsibility is distributed and elusive. Therefore, libraries, archiving organizations, publishers, and societies need to collaborate in developing and promoting best practices such as model license agreements and practical steps leading to the deposit of e-journal content with recognized preservation agencies.

Oya Y. Rieger, July 2016

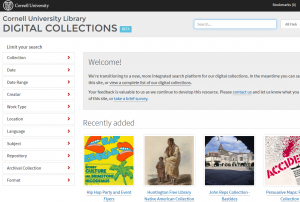

Assessing and Promoting Digital Collections as part of a DSPS Fellowship

At Cornell University Library, we are rich in digital collections. We have good workflows for identifying still and moving images that need to be digitized. We ensure that the groups of materials are discrete and that they are accompanied by sufficient metadata, then we digitize the materials, catalog them, and frequently build websites to promote the resulting images.

In Digital Consulting & Production Services (DCAPS), we know how to effectively create image collections within the library. What we have a lesser understanding of is how digital collections in our Digital Collections Portal are being used based on quantitative data (such as statistics on site use from Google Analytics and Piwik in combination with pop-up questionnaires) and qualitative data (such as focus groups and personal interviews), in combination with usability testing. Assessing how our digital collections are being used is one aspect of my DSPS Fellowship. From talking with members of the web team, it seems that assessment happens on a more ad hoc basis, and it would be good to have a systematic method of how to gauge the use of our collections on a regular basis.

Another component of this fellowship is examining how we promote our digital collections. We have incredible assets in the library that are of no use if patrons are unaware of them. It will be beneficial to have a general digital project promotion workflow. For instance, an excellent initiative has been entering information about collections into Wikipedia. If we can make a point of doing this for every new collection, we will have a wider user base for our images. We also highlight images from our digital collections on Instagram. I intend to create a checklist of how to spread the word about new collections after they are launched and on an ongoing basis as the second part of my fellowship.

Assessment and promotion are important phases of the digital curation lifecycle that are not specifically part of anyone’s role in DSPS and are crucial to the services we provide. Given the fact that DSPS recently created a digital curation unit, it is apparent that better serving the entire project lifecycle is a priority, and this fellowship will aid in improving these workflows.

From left to right: Marsha Taichman, DSPS Fellow, Jenn Colt and Melissa Wallace, DSPS Web Design and Development Team Members.

Developments at the HathiTrust Research Center (HTRC)

The HathiTrust Research Center has announced an important development towards the availability of the full HathiTrust corpus to scholars that use computational methods (“text-mining”) in their research. The press release also includes a timeline for future steps that will make the entire corpus of HathiTrust, regardless of copyright, open for scholars using computational methods.

The HathiTrust Research Center has announced an important development towards the availability of the full HathiTrust corpus to scholars that use computational methods (“text-mining”) in their research. The press release also includes a timeline for future steps that will make the entire corpus of HathiTrust, regardless of copyright, open for scholars using computational methods.

What is HathiTrust and the HathiTrust Research Center? The HathiTrust is a partnership of academic and research institutions, who continuously build a digital library together (currently over 14 million volumes) digitized from libraries around the world. If a book is not subject to limitations of copyright, it can be read online as well. Items that are subject to viewing restrictions are indexed in full, such that even though scholars cannot read them online, they can search on the text within the covers, and return both the titles of books that contain a given term, and also the page numbers of where these terms are found within a text. This full-text indexing can be leveraged for broader computational analysis (often called “text mining“) by scholars who find these methods useful. The HathiTrust Research Center (HTRC) is a joint effort of the Indiana University and the University of Illinois, who partner with HathiTrust to provide the software, infrastructure and computational scale for this scholarly method using the HathiTrust Digital Library as the primary source of text to be analyzed.

What exactly is the recent development, and who sees immediate benefit? The HTRC has provided the analytics portal to anyone who makes an account. In the portal, scholars can create a collection of their own, and run computational algorithms against that collection. The portal also offers the HTRC bookworm (open source software tied to a segment of the corpus), the data capsule (a virtual machine environment suitable for a scholar to load their own tools) and several data sets. With all of these tools, scholars have been limited to the segment of the corpus in the public domain. But beginning this summer, successfully funded Advanced Collaborative Support (ACS) proposals will pilot access to the full corpus (regardless of copyright status) in their projects. In real numbers, that means that instead of about 5 million books, the ACS scholars can work with the full 14+ million currently found in HathiTrust.

What is the timeline for future steps? The details are in the announcement, but briefly, the plans for the year ahead are:

- Immediately: Advanced Collaborative Services grant awardees will have access to full corpus.

- Fall 2016: A new features data set, derived from the full collection at both volume level and page level, will be released.

- Early 2017: Availability of full HathiTrust corpus through data capsule anticipated for general use.

Please consider me available for questions, concerns and guidance on getting started with the HTRC.

« go back — keep looking »