HathiTrust Research Center UnCamp2015

The HathiTrust Research Center (HTRC) held its third annual UnCamp on March 30 and 31. The HTRC has continued to demonstrate its commitment to the evolution of tools and functionality of interest to scholars in the digital humanities, this year adding two tools to the environments, algorithms and datasets that it already offers. The main themes emerging throughout the conference, as evidenced in both the announcements of developments and in various conversations, were the sustainability of the HTRC as a continued presence in the scholarly sphere, and the adoption of the computational environment by more scholars in their work. The latter topic was both demonstrated in various presentations of scholars’ work and engaged through discussion of ways in which the HTRC could improve its offerings to scholars by lowering barriers and enhancing adoption.

Cool Tools

The HTRC Bookworm is a tool very like the Google nGram Viewer, but it analyzes the HTRC indices instead of the Google data set. Given the similarities between the two, it is not surprising that the same genius was at work in the development of both tools (Erez Lieberman Aiden and Jean-Baptiste Michel, among others). The resulting Bookworm is available as an open source project (code available). The HTRC’s implementation of Bookworm is more graphically oriented, allowing a fairly complex set of constraints to be set on the data through an intuitive and clean visual interface. Currently, it is limited to unigrams. There was a lot of interest at the conference in allowing the HTRC Bookworm to be constrained to a collection of one’s own making, so it is likely that development of this feature will begin in the year to come.
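For readers who have not used an nGram-style tool, the idea of charting a unigram under metadata constraints can be sketched in a few lines of Python. This is only a toy illustration: the `VOLUMES` records, the `unigram_trend` function and the `language` field are hypothetical names made up for this post, and none of it reflects the actual Bookworm code or the HTRC indices.

```python
from collections import Counter

# Toy corpus: hypothetical records invented for illustration, not HTRC data.
VOLUMES = [
    {"year": 1900, "language": "eng", "text": "the whale and the sea"},
    {"year": 1900, "language": "ger", "text": "der wal und das meer"},
    {"year": 1901, "language": "eng", "text": "the sea the sea the storm"},
]


def unigram_trend(volumes, word, **constraints):
    """Return {year: relative frequency of `word`} for volumes matching all constraints."""
    hits = Counter()    # occurrences of the word per year
    totals = Counter()  # total tokens per year
    for vol in volumes:
        # Skip volumes whose metadata does not satisfy every constraint.
        if any(vol.get(key) != value for key, value in constraints.items()):
            continue
        tokens = vol["text"].lower().split()
        totals[vol["year"]] += len(tokens)
        hits[vol["year"]] += tokens.count(word.lower())
    # Normalize by yearly token count so years of different size are comparable.
    return {year: hits[year] / totals[year] for year in sorted(totals) if totals[year]}


# Relative frequency of "sea" per year, restricted to English-language volumes.
trend = unigram_trend(VOLUMES, "sea", language="eng")
```

Constraining the chart to a collection of one’s own making would, in this sketch, amount to one more metadata constraint (for example a hypothetical `collection` field on each record).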

The Data Capsule (located in the “portal” alongside the basic algorithms) also made its debut, along with a supporting tutorial. The Data Capsule is a virtual machine environment that a scholar can customize with various tools, lock, and then use to address the texts of the HTRC computationally. The scholar can then unlock the capsule and retrieve the results of the computation. Overall, this presents an environment that supports scholars using computational methods while preventing reconstruction of the corpus or any given work in it. Currently, analysis is restricted to books in full view, but the Data Capsule is engineered specifically for a level of security that will allow scholars to computationally address works in limited view as well. Legal work is already underway to pave the way for this important evolution.

Sustaining HTRC services

The HTRC has been spending considerable effort to grow from an experimental pilot into a reliable service. Although there is open acknowledgement that this road will be a long one, there are some welcome developments in this direction. HTRC has hired Dirk Herr-Hoyman (Indiana University – Bloomington) as Operations Manager; he is charged with bringing greater rigor to the HTRC service offerings (clearer versioning, increased security, responsive user help, clear development roadmaps, regular and documented/announced refreshes of primary data, etc.). Administrative development of an MOU between the HTRC and HathiTrust is another important step, leading to greater clarity about their separateness and relationship, and about the roles and responsibilities of each in terms of data management and security.

Adoption of the HTRC services by scholars using computational methods was a central concern. Usage is a measure of relevance, and programs of greater relevance make a better business case for continued investment of effort. Throughout the conference, discussions of what might be needed to facilitate adoption of the HTRC service offerings by scholars elicited many concrete steps that could help:

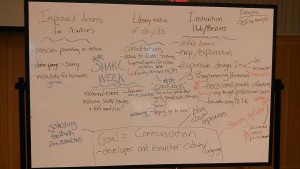

- User testing/UX of portal. Scholars and especially librarians feel that the user experience could benefit from some redesign. Currently there are at least four base URLs that lead to various tools, user experiences and documentation, and none of these share the same branding or interface design, leaving scholars confused as to what HTRC is and what it is offering. It is equally unclear where to go for help on each tool, where to post questions, and how to ask for features or development when one’s project is outside the current scope of a tool and requires some extension. There was some talk about rebranding the HTRC services as Secure HathiTrust Analytical Research Commons (SHARC) and placing all service offerings under that umbrella, as well as about some immediate and longer-term steps that might be taken to move things in a positive direction.

- Discussions also revealed the need to strengthen the link between developments at Google and how they affect HathiTrust and HTRC. Google improves its scanned books periodically, developing image corrections and improved OCR at scale. (In fact, Google has recently released a new OCR engine and is re-processing many books that use the long s, including those set in Fraktur. Early results look very promising.) These improved volumes are reingested into HathiTrust. These improvements can be better leveraged by:

  - Improved communication between HathiTrust and HTRC on improvements underway. The Google Quality Working Group might be a good place to coordinate some of this information. If these updates are systematically conveyed to HTRC, it can direct its precious resources into other efforts.

  - Updates of the data HTRC receives from HathiTrust that are coordinated with major releases of material re-processed by Google.

- Effort should be directed to relationships. The suggestions in this conversation were that in the short term, HTRC might supply more advising on grants and on grant processes that would leverage HTRC services. In the mid to long term, HTRC might seek international partnerships and relationships, and might also leverage librarians and scholars as ambassadors to professional societies to raise awareness.

Scholarly Projects

There were two keynotes that described scholarly projects, and nine projects were touched upon in lightning rounds.

Michelle Alexopoulos, “Off the Books: What library collections can tell us about technological innovation…” Michelle shared her perspective as an economist working with HTRC data to discover patterns in the time between the invention of a technology and its adoption, and to describe the economic impacts, as well as the ways in which a specific technology might affect other technological developments. Her project employed algorithmic selection of a large corpus based on MARC attributes, together with Bookworm/nGram data.

Erez Lieberman Aiden, “The once and future past.” Erez was on the original team that created the nGram viewer code and coined the term Culturomics to describe the patterns they were seeing at the intersection of culture and the trends revealed through algorithmic analysis of texts. He recapped the scholarly impact of the nGram viewer, introduced its open source successor (Bookworm), and discussed the provocative notion that we can use this data predictively as well as retrospectively.

Lightning rounds included Natalie Houston and Neal Audenaert’s “VisualPage: A Prototype Framework for Understanding Visually Constructed Meaning in Books”. Natalie also visited Cornell on April 16 to present and discuss her work as a part of the Conversations in the Digital Humanities series.