Data Vet College Style

Using AWS Redshift, AWS Batch, NodeJS and Docker to accomplish data in the cloud

Team Members: Craig Riecke, Daniel Sheehan and Scott Ross (sr523)

In 2015, the Animal Health Diagnostic Center at Cornell University changed its laboratory information management system implementing VetView as a replacement to the legacy system- UVIS. With limited time, budget and resources, our team was forced to deploy our main business intelligence tool, Tableau, directly over VetView’s operational data model, which used Oracle. While this worked initially giving our business staff access to data, we quickly realized that we were outgrowing the inherent constraints implicit on having Tableau on an operational data model. These constraints included speed/performance issues, business logic embedded in sql, duplication of sql across various tableau dashboards and data silo’ed in that operational model. At the end of the day, we needed something faster, reduced complexity for our report writers, and a building block for the future.

This blog post is the story of how the VMIT development team moved data to Redshift, a discussion on Redshifts capabilities and the tooling used to move data.

Redshift:

Pros:

- It is affordable to start: I am not saying that it is affordable, period. Redshift can get expensive if you shove all your data into it, and your data is growing fast. That said, compared to its on-premises and appliances-based alternatives, Redshift is incredibly cheap to start with at $0.25 per hour or $180 per month. We also had a 30 day free trail.

- It is mostly PostgreSQL: Redshift has a different query execution engine, but in terms of the interface, looks like Postgres. This means it has rich client tools and connectivity can be done via both JDBC and ODBC.

- It plays nice with Tableau: Because of the above, it plays very nicely with Tableau and other BI tools. This is incredibly important for our group because we are targeting BI tools.

- Scalability: it’s horizontally scalable. Need it to go faster? Just add more nodes…

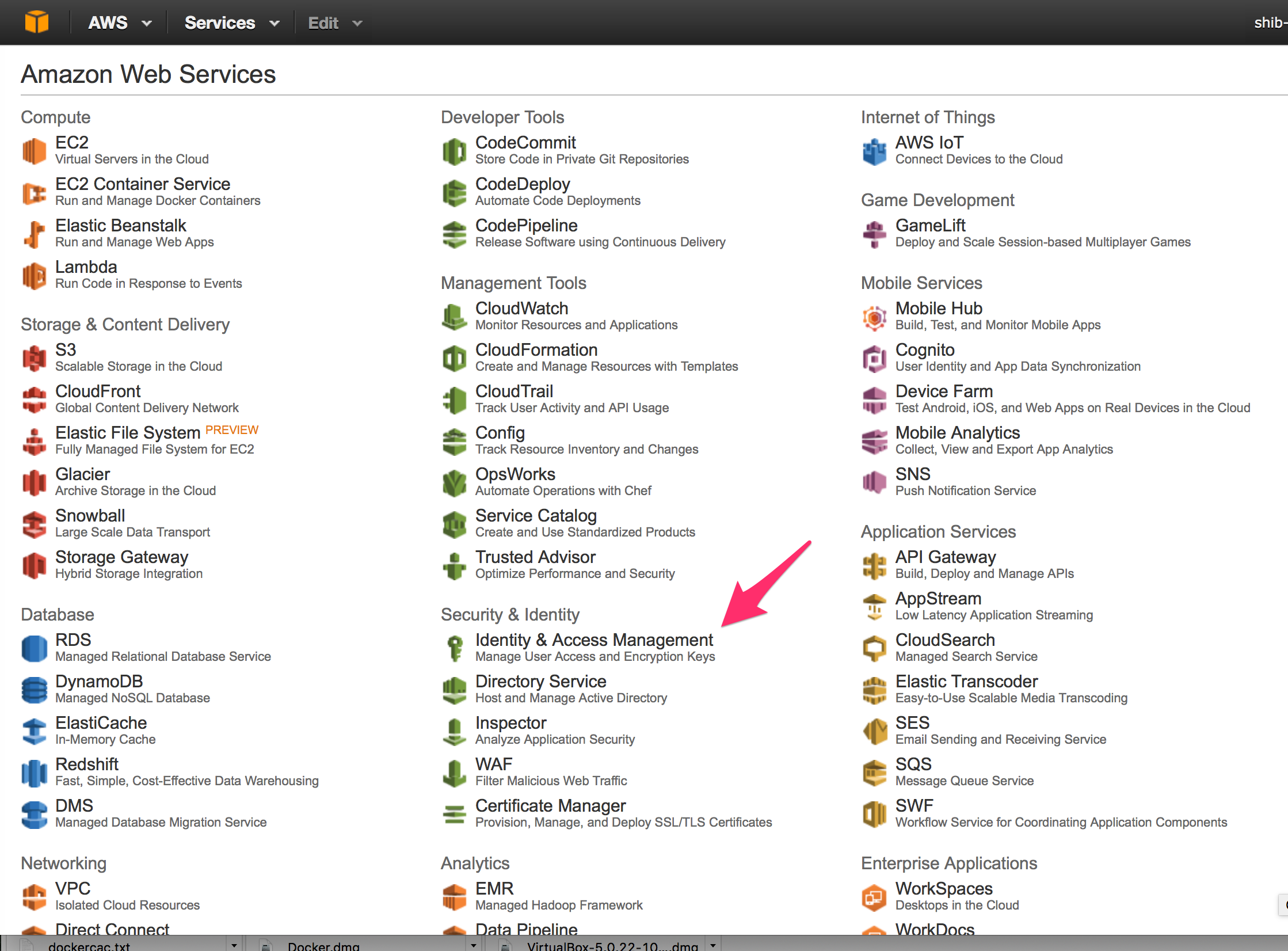

- AWS: It’s part of the AWS ecosystem. So while other tools, like Google’s BigQuery, are interesting, we have settled on AWS as our platform for infrastructure.

Cons:

- Loading data can be tricky: The process we are using to load data into redshift requires extracting the data from our source database, creating a csv file, loading that csv file to S3, then using the COPY command to get data into redshift. Parallel processing is very important in this step to reduce the amount of time it takes to do the ETLs. It does take time to master this process.

Things to think about:

- Distkey/Sortkey required for optimal performance. This is a new concept for our developers group, and took us some time to think about it (and will require time as we move into the future)

- Compute and storage are tied together. There are some interesting talks about how this is an anti-pattern for data warehousing. For us, this doesn’t matter at the moment.

- Mutli AZ is painful

Decision:

At the end of the day, we were basically were comparing Redshift to other RDBMS solutions hosted in AWS RDS. We settled on Redshift more because of our future needs, than our current needs. And so far, we love it.

Setting up Redshift:

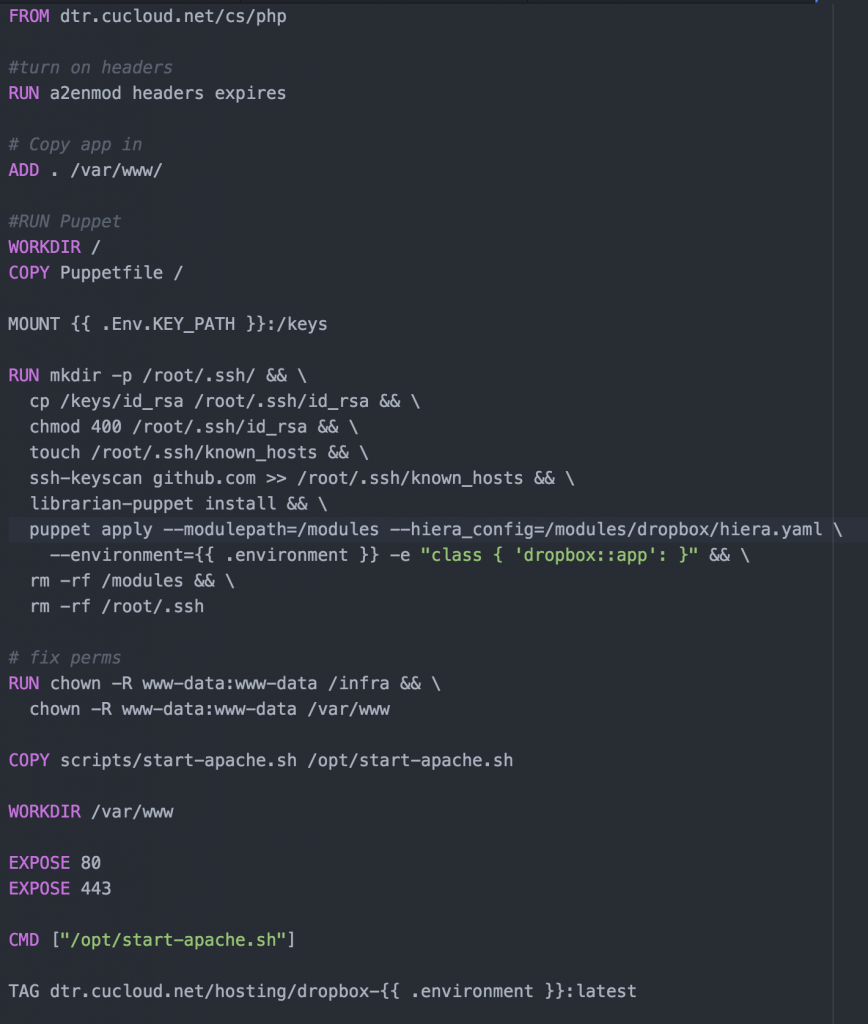

One of the tenants of our developer group when building solutions is to implement infrastructure as code . In that vein, here are the steps to build the redshift cluster along with the json configuration to make it happen:

Steps:

- Install the aws cli

- Run this command: $ aws redshift –cli-input-json `cat cluster.json`

- Create users / schemas

The output of the above should give you the endpoint of your cluster. Use that information to connect your SQL tool to the new Redshift cluster. Amazon recommends SQL Workbench/J. But other tools work just as well (DBVisualizer).

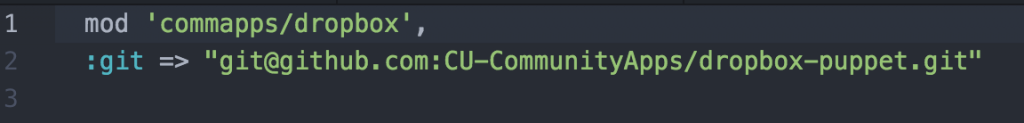

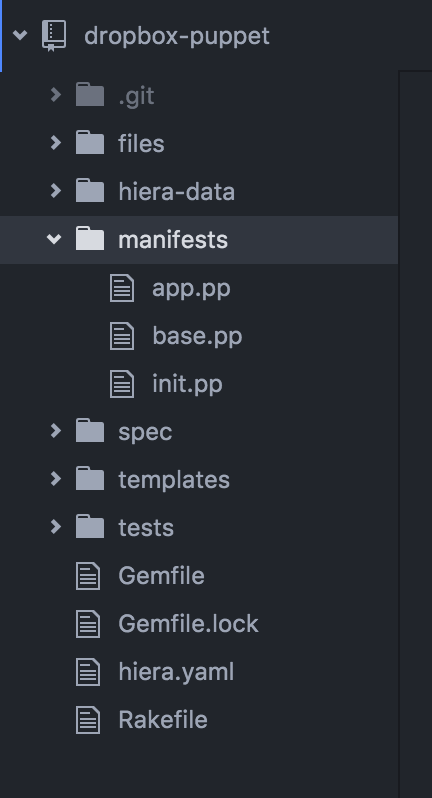

* All of these steps are stored in our git repository on Bitbucket. We can automate this using Jenkins, but in this case, we really don’t have a need to have Redshift creation be automated. However, if we have to rebuild this cluster, we will rebuild it from these scripts. We do not use the aws console to make changes on the fly to systems.

Writing ETL Process:

Here were our requirements for an ETL tool:

- It had to be maintainable as code (visual coding environment were eliminated, including our Orion Health Rhapsody Interoperability engine)

- It needed to be able to do parallelism

- It needed to work with Docker

- Preferably the tool/language fit our current ecosystem

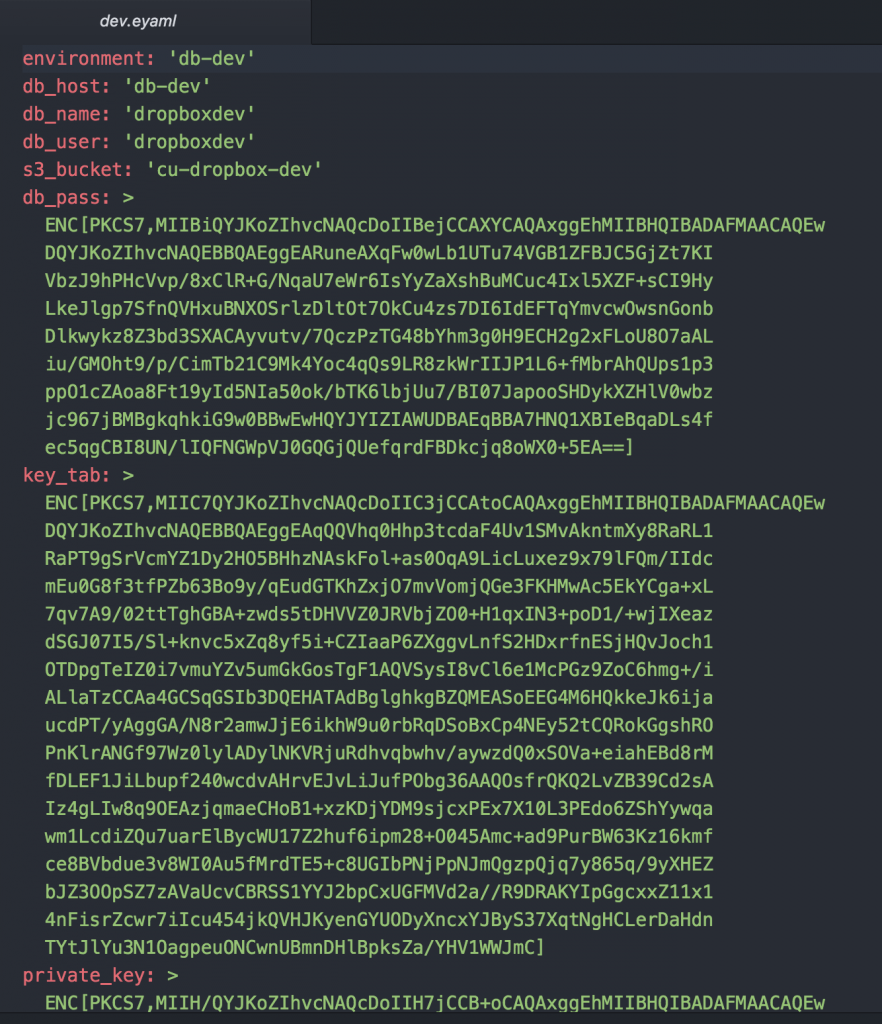

- We also wanted to handle secrets gracefully.

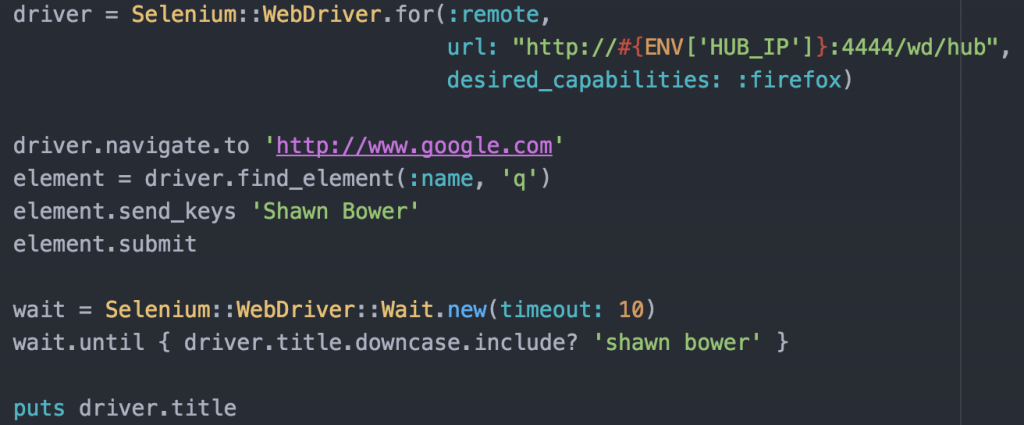

So we landed on NodeJS and specifically TypeScript to do our ETL. NodeJS is being used as our API language of choice, is easy to run in a docker container, and we also had code available for reuse. TypeScript adds types to javascript, and easy way to make writing code more bearable then JavaScript.

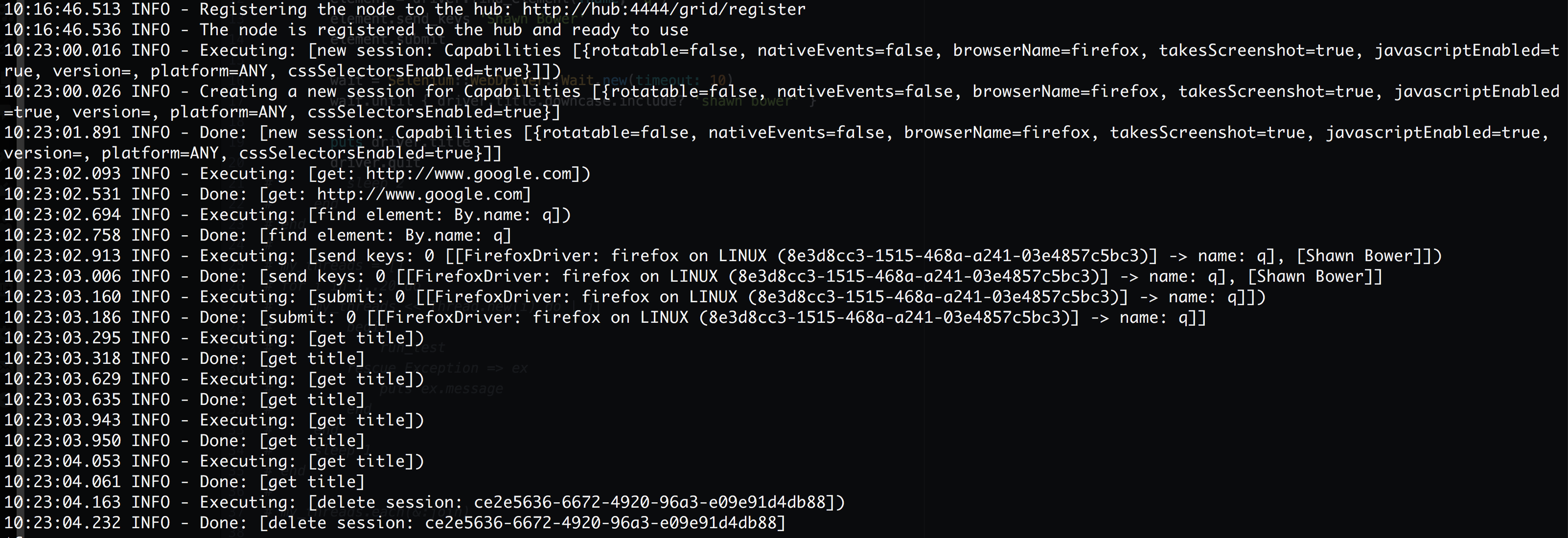

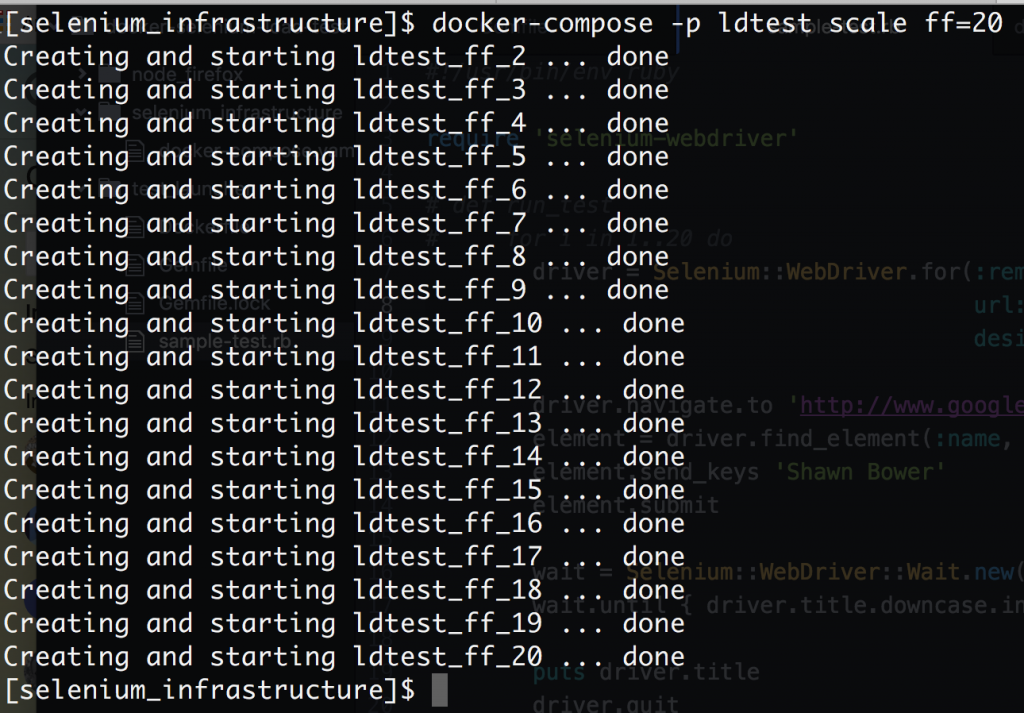

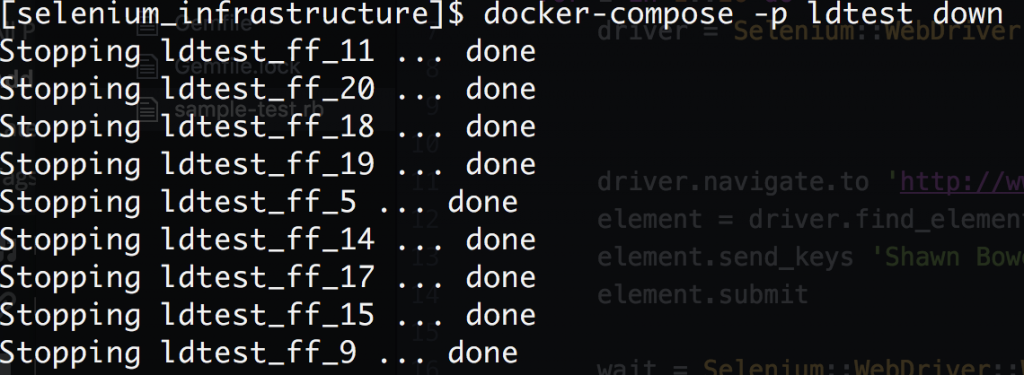

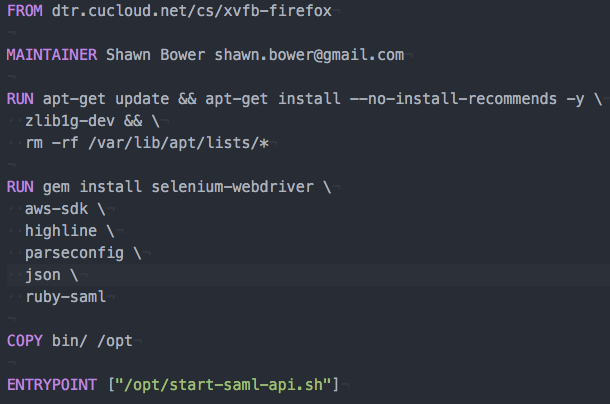

Again, this was stored in our git repository. We wrap the entire etl process into a docker container.

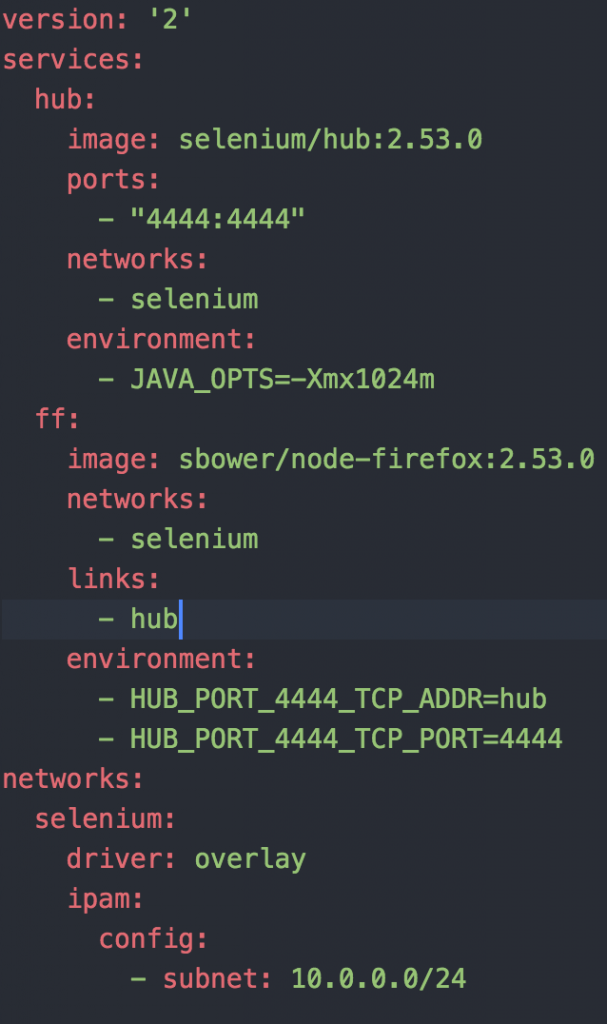

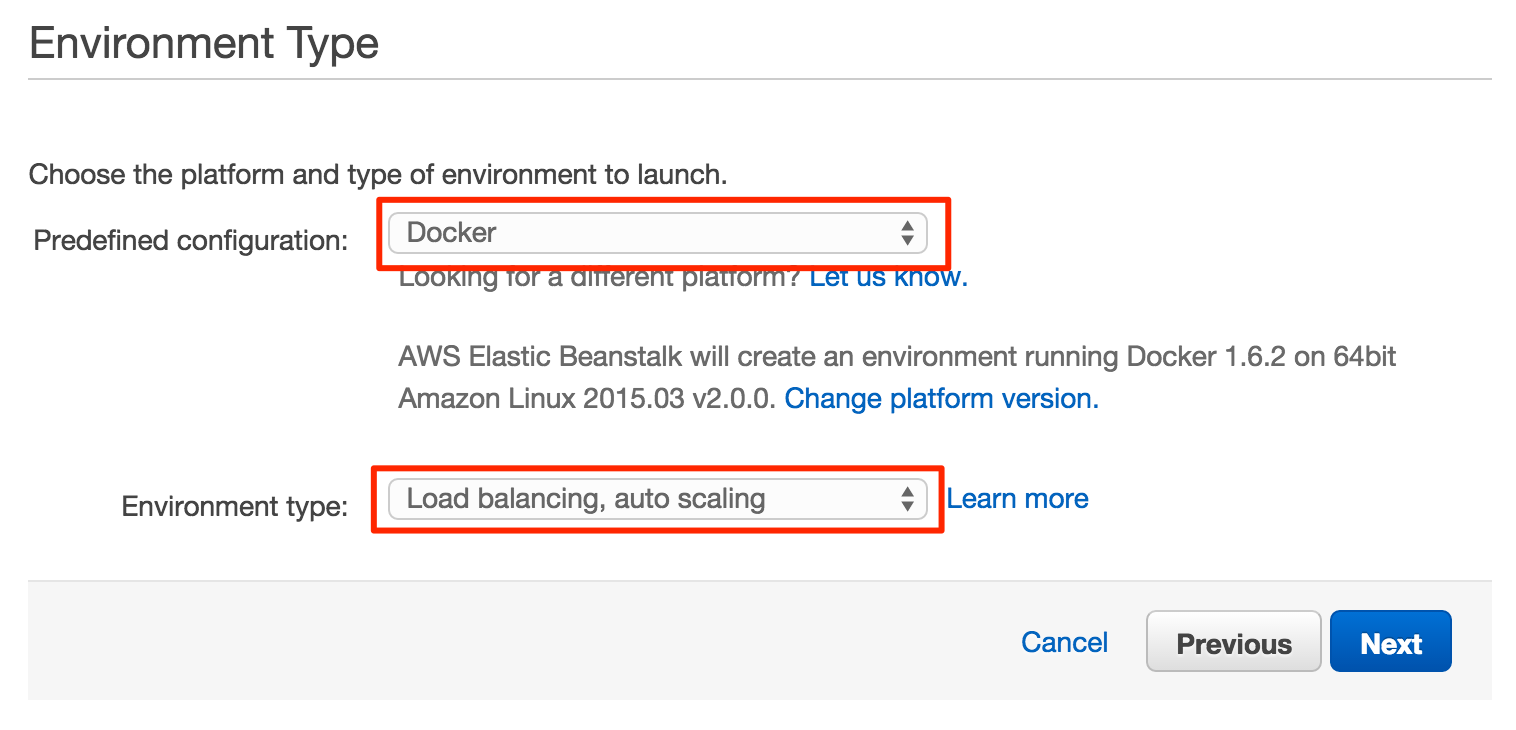

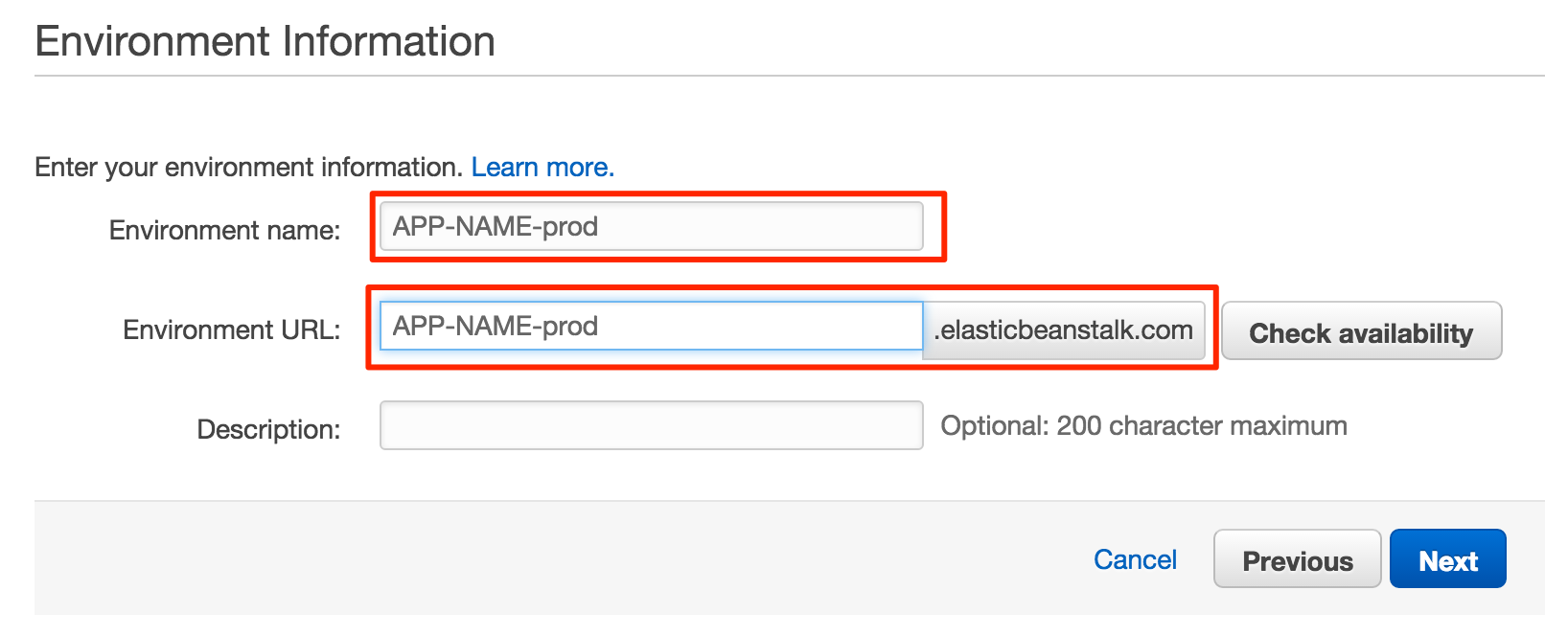

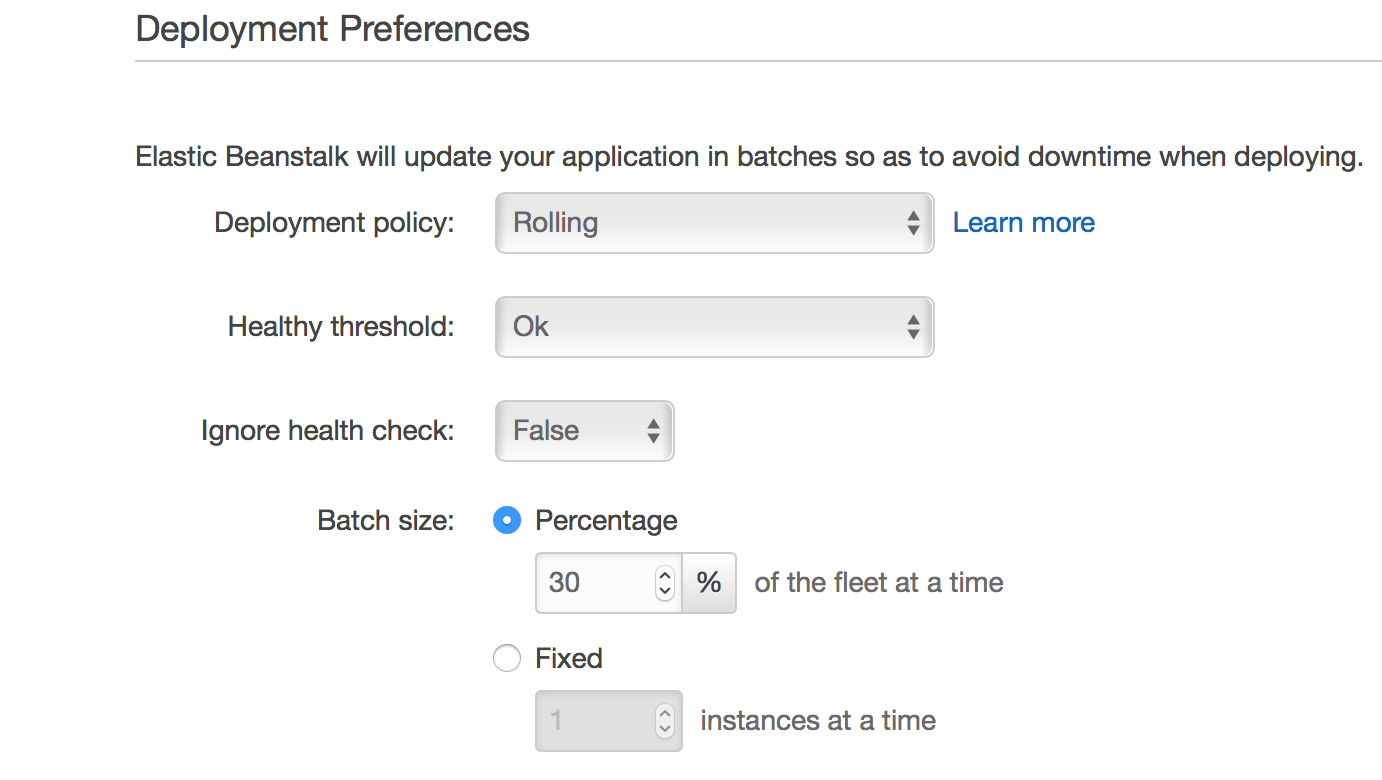

Using AWS Batch and Docker for the production pipeline:

The final piece of the puzzle is to setup a pipeline to get data into redshift using the ETL process. Here we decided to use a newer service from AWS called “Batch.” Batch has these concepts baked in:

- Jobs represent a unit of work, which are submitted to Job Queues, where they reside, and are prioritized, until they are able to be attached to a compute resource.

- Job Definitions specify how Jobs are to be run. While each job must reference a Job Definition, many parameters can be overridden, including vCPU, memory, Mount points and container properties.

- Job Queues store Jobs until they are ready to run. Jobs may wait in the queue while dependent Jobs are being executed or waiting for system resources to be provisioned.

- Compute Environments include both Managed and Unmanaged environments. Managed compute environments enable you to describe your business requirements (instance types, min/max/desired vCPUs etc.) and AWS will launch and scale resources on your behalf. Unmanaged environments allow you to launch and manage your own resources such as containers.

- Scheduler evaluates when, where and how to run Jobs that have been submitted to a Job Queue. Jobs run in approximately the order in which they are submitted as long as all dependencies on other jobs have been met.

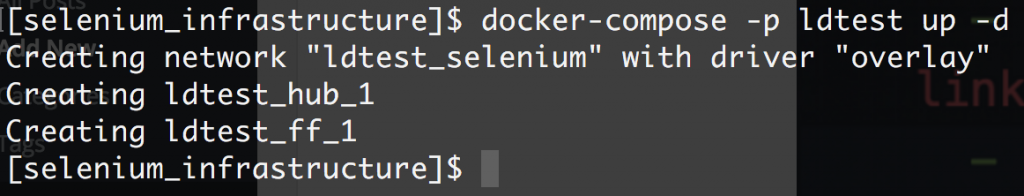

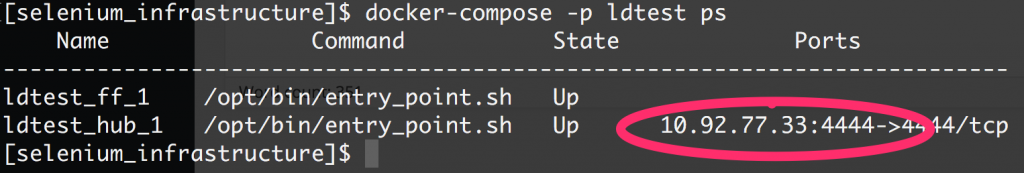

To use, we just give Batch a docker image using AWS ECR (this started an internal debate about how we use ecr vs. CU dtr vs. docker hub — something for another day).

To kick off a batch job from aws cli for one time run:

$ aws batch submit-job –job-definition vet-dw-etl-sb –job-queue normal –job-name vet-dw-etl-sb-2016-01-10

To create a batch job on AWS:

$ aws batch create-compute-environment --cli-input-json file://compute-environment-normal.json $ aws batch create-job-queue --cli-input-json file://job-queue-normal.json $ aws batch register-job-definition --cli-input-json file://vet-dw-etl-sb-job-definition.json

The json file references our docker image on amazon’s docker registry, that image contains our etl code.

For now, we run this nightly but we have the option of running this at any interval. Our etl process takes about ~40mins for 30million rows.

**If anyone is interested in seeing how json files for creating batch jobs or our redshift environment, please let us know. Ditto for any code.