by Paul Allen

As Cornell units consider moving various software and services to the cloud, one of the most common questions the Cloudification Services Team gets is “What is the network bandwidth between cloud infrastructure and campus?” Bandwidth to cloud platforms like Amazon Web Services and Microsoft Azure seems critical now, as units are transitioning operations. It’s during that transition that units will have hybrid operations (part on-premises and part in-cloud), and moving or syncing large chunks of data is common.

Physical Connectivity

As of June 2016, the main Cornell campus was connected to the public Internet by two physical trunks, each with 10Gbit/s of capacity. Most parts of the campus backbone network also run at 10Gbit/s, usually with multiple paths between any two backbone locations. The campus is also connected to the Amazon Web Services us-east-1 region by AWS Direct Connect over two physical connections, one running at 1Gbit/s and a backup running at 100Mbit/s. Only specific AWS accounts are set up to use Direct Connect, and those are usually configured so that only 10-space traffic is routed between private addresses in AWS and on campus. Communications between public IPs on campus and public AWS and Azure IPs use the public internet.

I performed these benchmarks using a recent-vintage MacBook Pro connected to the campus network over a 1Gbit/s wired connection. The laptop had a 10-space address that was NAT’ed to a public IP address when I needed to connect to the internet. Therefore, when running benchmarks to AWS, this configuration used AWS Direct Connect when the target was a private IP address (10-space) in AWS, but used the public internet for public IP addresses in AWS and Azure. I benchmarked the laptop against our campus speed test system and got 796 Mbit/s (100 MB/s) upload and 862 Mbit/s (108 MB/s) download.
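The MB/s figures in parentheses come from dividing the bit rate by eight. Here is a minimal sketch of that conversion, assuming decimal units (1 MB = 1,000,000 bytes); the function name is only illustrative.

```python
def mbit_to_mbyte_per_s(mbit_per_s: float) -> float:
    """Convert megabits per second to megabytes per second (decimal units)."""
    return mbit_per_s / 8


# Campus speed test results quoted above.
upload_mb = mbit_to_mbyte_per_s(796)
download_mb = mbit_to_mbyte_per_s(862)

# Ceiling for any 1Gbit/s link, ignoring protocol overhead.
line_rate_mb = mbit_to_mbyte_per_s(1000)

print(f"upload    {upload_mb:.1f} MB/s")
print(f"download  {download_mb:.1f} MB/s")
print(f"line rate {line_rate_mb:.1f} MB/s")
```

The same helper gives the 125 MB/s ceiling for a 1Gbit/s connection, which is the yardstick for every result that follows.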

Benchmarks

I used iperf3 for the benchmarks, using the laptop as the client and the AWS/Azure Linux servers as the server. In AWS, I used a c3.8xlarge instance, which is one of the smallest instances to sport 10Gbit/s networking. (However, I did not specifically configure or ensure that it was using enhanced networking. The 10Gbit/s interface on the instance just ensures that the instance network interface wasn’t a bottleneck.) The AWS instance was launched in the us-east-1 region. In Azure, I used a Standard_A4 instance size, which offers “high” bandwidth, though it is unclear how that relates to maximum obtainable network speeds. The Azure instance was launched in the US East region. I used RedHat 7.1 as the OS in both Azure and AWS.

On the servers I ran the following command:

iperf3 -s

Each benchmark ran for 60 seconds, with 1, 2, 4, or 8 clients in parallel. On the laptop, the command was:

iperf3 -c server_ip_address --verbose --time 60 --interval 10 --parallel n

I generally ran each combination only once, but when a combination was run more than once, I averaged the results. I ran each test sequentially.
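For anyone repeating these runs, the sweep over client counts and the averaging of repeated runs could be scripted roughly as below. This is a sketch, not what I actually ran: it swaps `--verbose` for iperf3's `--json` output so the result can be parsed. The function names are hypothetical, and the JSON field path (`end.sum_received.bits_per_second`) is an assumption to verify against the output of your iperf3 version.

```python
import json
import statistics
import subprocess

PARALLEL_COUNTS = (1, 2, 4, 8)


def build_command(server: str, parallel: int, seconds: int = 60) -> list[str]:
    """Build the client-side iperf3 command for one benchmark run."""
    return [
        "iperf3", "-c", server,
        "--time", str(seconds),
        "--interval", "10",
        "--parallel", str(parallel),
        "--json",
    ]


def received_mb_per_s(report: dict) -> float:
    """Extract aggregate received throughput in MB/s from a parsed report.

    Assumption: the summary lives at end.sum_received.bits_per_second.
    """
    bits_per_second = report["end"]["sum_received"]["bits_per_second"]
    return bits_per_second / 8 / 1_000_000


def run_once(server: str, parallel: int) -> float:
    """Run iperf3 once and return the measured throughput in MB/s."""
    completed = subprocess.run(
        build_command(server, parallel),
        capture_output=True, text=True, check=True,
    )
    return received_mb_per_s(json.loads(completed.stdout))


def sweep(server: str, repeats: int = 1) -> dict[int, float]:
    """Run each client count sequentially, averaging any repeated runs."""
    results = {}
    for parallel in PARALLEL_COUNTS:
        runs = [run_once(server, parallel) for _ in range(repeats)]
        results[parallel] = statistics.mean(runs)
    return results
```

Calling `sweep("server_ip_address", repeats=2)` would run eight 60-second tests back to back and return one averaged MB/s figure per client count.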

Results

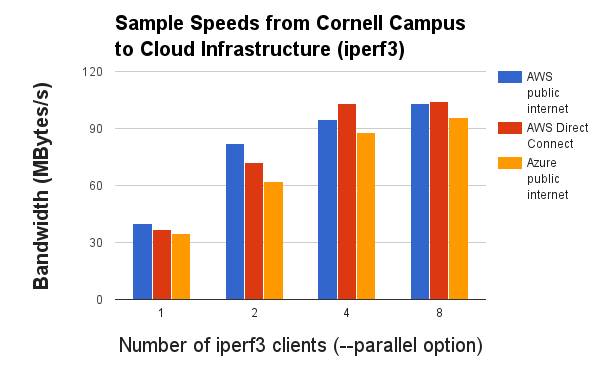

| Benchmark (MB/s) | 1-client | 2-client | 4-client | 8-client |
|---|---|---|---|---|
| AWS public internet | 40 | 82 | 95 | 103 |
| AWS Direct Connect | 37 | 72 | 103 | 104 |
| Azure public internet | 35 | 62 | 88 | 96 |

Note that the test configuration did not hit any bottlenecks until the 4- and 8-client benchmarks. At those client counts, the numbers were beginning to approach two limits: the theoretical maximum of 125 MB/s for my laptop’s wired 1Gbit/s connection, and the measured speed from my laptop to the campus backbone (as shown by the Cornell Speed Test). That same theoretical limit would apply to our current 1Gbit/s AWS Direct Connect connection. Given the speeds attained by the benchmarks at the 4- and 8-client levels, it’s clear that there currently isn’t much other traffic on Cornell’s Direct Connect to AWS; otherwise my benchmark numbers would have been impacted by that traffic.
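To see how close each run came to that ceiling, the results can be expressed as a fraction of the 125 MB/s line rate. The sketch below uses only the numbers from the results table; the variable and function names are illustrative.

```python
# Theoretical ceiling of a 1Gbit/s link in MB/s (decimal units).
LINK_MB_PER_S = 1000 / 8

# Measured throughput in MB/s, keyed by number of parallel clients.
results = {
    "AWS public internet": {1: 40, 2: 82, 4: 95, 8: 103},
    "AWS Direct Connect": {1: 37, 2: 72, 4: 103, 8: 104},
    "Azure public internet": {1: 35, 2: 62, 4: 88, 8: 96},
}


def utilization(mb_per_s: float) -> float:
    """Fraction of the 1Gbit/s line rate that a measurement represents."""
    return mb_per_s / LINK_MB_PER_S


for name, by_clients in results.items():
    cells = ", ".join(
        f"{clients}-client {utilization(speed):.0%}"
        for clients, speed in by_clients.items()
    )
    print(f"{name}: {cells}")
```

For example, the 8-client Direct Connect run works out to 104/125, or roughly 83% of line rate, while a single client reaches only about 30%.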

It’s notable that the bandwidth to Azure consistently lagged behind both AWS configurations at the higher client counts. We’d have to delve into that further to see whether that is because of the instance type used here or due to some other reason within or beyond our control.

It’s good to have these numbers to use as a reference and to see how they change in the future. A lot of factors come together to generate these numbers (including, for example, the campus firewall). Next time, I’ll have to get hold of a server on campus with 10Gbit/s connectivity to ensure that my itty-bitty laptop isn’t impacting the results.